What tens of thousands of AI prompts reveal about engineering teams

What separates teams that build agentic workflows in Port from everyone else? We analyzed tens of thousands of prompts to Port AI to find out.

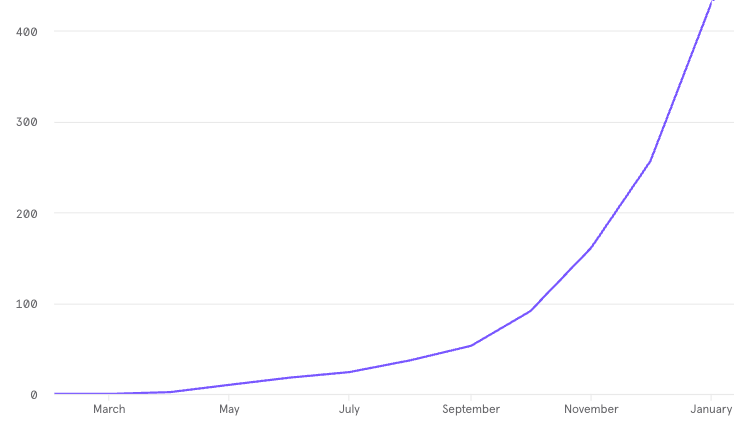

Ten months ago, we started releasing AI features in Port. Since then, AI has become an essential part of how teams work in Port. As you can see, AI adoption in Port organizations has taken off.

Port acts as the system of record and system of action for our customers' engineering teams, for both humans and agents.

As a system of record, we know about their services, ownership data, deployment history, team structures, and more.

As a system of action, we know what actions engineers are triggering, what workflows are being run, and more.

Now that Port has an AI layer, engineers and agents are interacting with Port in a new way. Instead of looking at data in a dashboard, they ask for it in natural language. Instead of running an action manually, they ask AI to run it for them.

In the past 10 months, platform and software engineers at large engineering organizations have prompted Port AI tens of thousands of times. Because it's connected to their most critical systems, they use it to ask complex operational questions and trigger meaningful actions.

So we decided to study exactly how they are using it.

What is AI in Port?

If you're already familiar with Port's AI capabilities, feel free to skip this section.

If not, here's a short history of it:

- Port launched Context Lake, a way for AI agents to query your organizational knowledge and take action with the proper governance in place

- We then launched AI agent widgets, embeddable chat widgets that have the context of the portal

- After that, we released our remote MCP server, a general AI assistant, and a Slack app

- We also launched a few specialized AI features like integration helpers (to help bring your data into Port) and automatic data discovery (to help enrich your software catalog)

Now you're up to speed on Port's AI capabilities. (By the time you read this, we'll probably have more.)

What we wanted to know

We had two questions going in:

1. What are engineers trying to do with Port AI?

2. Do teams change how they use Port AI over time?

We classified each query by what the engineer was trying to do. Were they trying to understand their environment, manage infrastructure, fix a security issue, or something else entirely? Then we looked at how those patterns shifted over time.
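To make the approach concrete, here is a minimal sketch of classifying prompts by intent and tracking each intent's share per month. It is an illustration, not the method used in this study: the intent labels, the keyword rules, the function names, and the sample data are all assumptions made up for the example.

```python
from collections import Counter, defaultdict

# Hypothetical intent labels and keyword rules. The taxonomy and method
# actually used in the study are not described in this post.
INTENT_KEYWORDS = {
    "context_gathering": ("who owns", "show me", "how do i", "what repos"),
    "infrastructure": ("provision", "scale", "cluster"),
    "security": ("vulnerability", "cve", "secret"),
}


def classify(prompt: str) -> str:
    """Return the first intent whose keywords appear in the prompt."""
    text = prompt.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "other"


def intent_share_by_month(prompts):
    """Map each month to {intent: fraction of that month's prompts}."""
    counts = defaultdict(Counter)
    for month, prompt in prompts:
        counts[month][classify(prompt)] += 1
    return {
        month: {intent: n / sum(c.values()) for intent, n in c.items()}
        for month, c in counts.items()
    }


# Made-up sample data, for illustration only.
sample = [
    ("2025-01", "Who owns the payment service?"),
    ("2025-01", "Show me the list of my repositories"),
    ("2025-02", "Fix the vulnerability in the auth service"),
    ("2025-02", "How do I deploy to production?"),
]
shares = intent_share_by_month(sample)
```

Comparing the per-month shares side by side is one simple way to see whether a category grows, shrinks, or holds steady over time.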

Here are our findings:

Finding 1: 33% of prompts ask about scattered engineering knowledge

33% of all AI queries are what we call "context gathering".

These are questions like "Who owns the payment service?", "Show me the list of my repositories", and "How do I deploy to production?"

In our research, we classified a prompt as context gathering when it asked for information that should already be accessible in Port: service ownership, team structures, deployment processes, documentation locations.

Let's explore why this use case is so strong through an engineer's first few months at a company:

Week 1 - Hello world

You're given access to a bunch of tools at various stages of the SDLC you may or may not have used before. You're assigned to a team and need to figure out what they are responsible for. You have an endless list of questions, but you don't want to interrupt your teammates too much.

"What repos does my team own?"

"What services are in my team's domain?"

"Where do I start?"

Weeks 2–4 - Hello unfamiliar deployment process

By now, you've gotten your first ticket. It's probably a tiny feature or small bug fix. But it's on a service you've never touched before. So now you have a whole new set of questions, like "What does this service depend on?" and "What's the deploy process?"

The answers exist, but they're spread across Confluence, Notion, Slack threads, and someone's head. You might even get different answers from different teammates.

Month 2 - Hello real work

Congrats! You just got your first big ticket. But it touches a service your team doesn't own. Now you've started to expand your scope and touch other people's code, which means gathering brand new context. If you had 30 questions in week 3, now you have 300.

"Why was this built this way?"

"Is there anything I should know about before I change this?"

The truth is, this part never ends. Engineers are constantly gathering context, just in different parts of the codebase or SDLC. This is why we haven't seen queries of this kind decline over time, even in the same company.

Every one of these questions is answerable by AI, as long as the context is there. And if you have an IDP, it already is.

Finding 2: User trust in AI-feature accuracy is built gradually

We looked at how queries evolved over time within individual organizations, and the same pattern appeared everywhere. Teams never started with complex questions. They started with the simplest thing they could think of, and their questions got more complex over time. Like this:

Month 1: "Show me my repositories."

Month 2: "Who owns the auth service?"

Month 6: "What is the tech team ID for the Network Access team?"

Month 10: "How many deploys were performed last month on service X to prod?"

The AI could of course answer the month-10 question on day one (assuming the context is there). So it's a matter of trust. The first question is a test. The engineer already knows the answer, or can easily verify it. If the AI gets it right, the next question is slightly harder. If that's right too, they start relying on it for things they can't easily check themselves.

We saw this in how teams accessed AI, too. Early on, most usage came through a chat widget. Someone types a question and reads the answer. A few months in, we saw more questions asked through the Slack app or Cursor via MCP. A few months after that, the most active teams were calling the AI API directly, embedding AI into their own internal tools and workflows.

Teams that tried to skip the chat phase and start with autonomous agents tended to get stuck. The teams that let engineers build trust at their own pace got to automation faster.

Finding 3: Platform teams that continuously improve their software catalog see faster engineer adoption

We encourage our customers to take a product management mindset while they build in Port. After all, they're building a product for their engineers. Taking a product mindset means they're in a continuous feedback loop:

- Ask engineers where they get stuck

- Analyze their input

- Decide which problems to solve

- Build solutions for them

- Deliver them to engineers

- Go back to the first step

So we asked: Do teams that take this product mindset get better results from their AI?

I want to tell you about the customer with the most experience taking this approach, and the results they got.

At the beginning of their journey building a developer portal, they built a quick MVP, and told engineers they could start using it. Not surprisingly, most engineers didn't use it. Their engineers had a certain muscle memory for their existing tools and couldn't make the switch.

So the platform team building the portal started over. They physically sat down next to the engineers and just watched them work. They realized that what their engineers wanted most was a lightning-quick way to see exactly what they needed from where they were already working.

So they rebuilt, adding more data sources, refining the data model, and creating more relevant self-service actions.

And as the catalog got richer, something clicked for engineers. They started using Port AI substantially more because the answers they got were substantially better.

As one person at that customer put it: "Agents magnify the best practices you have or the lack of practices you have."

Best practices only come from iteration.

So that's what we saw in our most successful teams. They didn't build once and walk away. They asked what engineers needed, built it, and then asked for feedback. Then they repeated the cycle.

Finding 4: Our leading customers are building agentic workflows

Most engineers interact with AI through a chat interface. Type a question, get an answer. That accounts for the majority of AI usage.

But 12% of queries are something else. They run inside workflows, where the AI query is fired by a trigger rather than by a question from a person.

Here are some examples of what I mean:

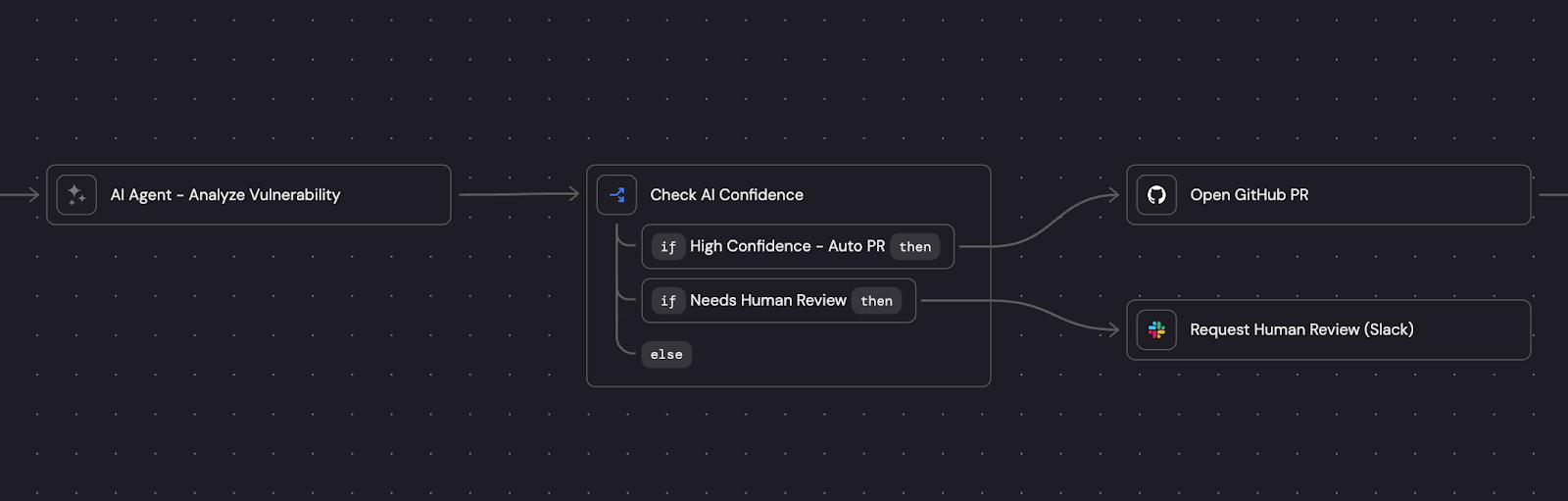

Security remediation. One organization connected their security scanner to an AI agent. The scanner finds a vulnerability, then creates a Jira ticket. That ticket triggers the agent, which pulls the relevant code, analyzes the vulnerability, generates a fix, and opens a pull request. The security team used to write the fixes - now they review PRs.

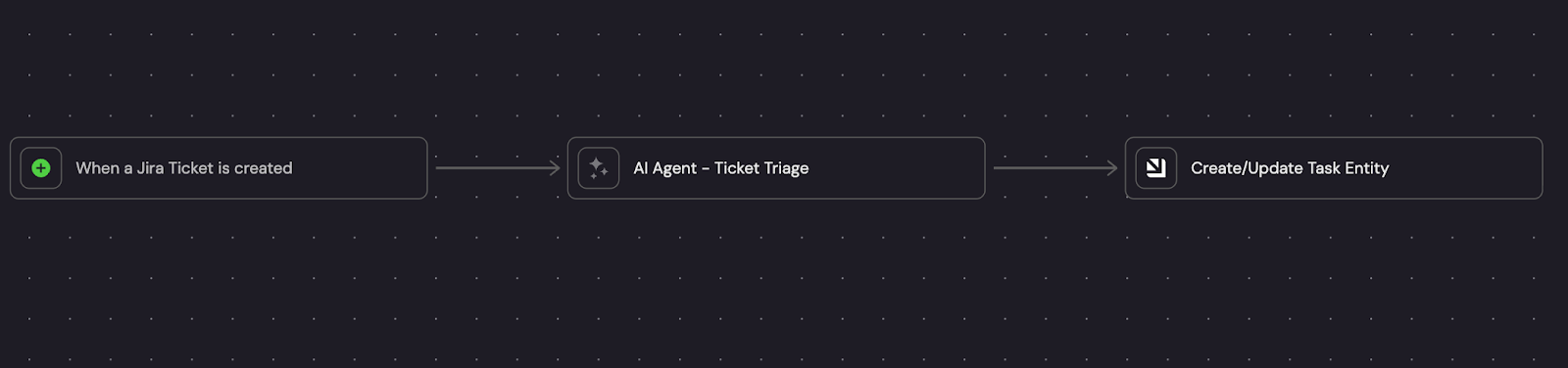

Ticket triage. Another organization built an agent that receives every incoming Jira ticket, reads the description, classifies it by type and severity, assigns it to the right team, and sets priority. It completed 36 automated runs in December alone. Before this, a human did that routing manually for every ticket. Now a human only gets involved when the ticket is already classified, prioritized, and sitting in the right team's queue.
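The shape of a triage step like this can be sketched in a few lines. This is a simplified illustration, not the customer's implementation: in the workflow described above an AI agent makes the classification calls, while here plain keyword rules stand in for it, and the team names, keywords, and priority scale are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical routing table and severity keywords. An AI agent would make
# these decisions in the real workflow; simple rules stand in for it here.
TEAM_BY_TYPE = {"bug": "backend", "security": "security", "request": "platform"}
SEVERITY_KEYWORDS = {
    "high": ("outage", "down", "data loss"),
    "medium": ("slow", "error"),
}
PRIORITY_BY_SEVERITY = {"high": 1, "medium": 2, "low": 3}  # 1 is most urgent


@dataclass
class Triage:
    ticket_type: str
    severity: str
    team: str
    priority: int


def classify_type(description: str) -> str:
    """Pick a ticket type from keywords in the description."""
    text = description.lower()
    if "vulnerability" in text or "cve" in text:
        return "security"
    if "error" in text or "fails" in text or "down" in text:
        return "bug"
    return "request"


def classify_severity(description: str) -> str:
    """Return the first severity whose keywords match, else 'low'."""
    text = description.lower()
    for severity, keywords in SEVERITY_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return severity
    return "low"


def triage(description: str) -> Triage:
    """Classify a ticket, then derive its team and priority."""
    ticket_type = classify_type(description)
    severity = classify_severity(description)
    return Triage(
        ticket_type=ticket_type,
        severity=severity,
        team=TEAM_BY_TYPE[ticket_type],
        priority=PRIORITY_BY_SEVERITY[severity],
    )


result = triage("Checkout API is down after deploy")
```

The point of the structure is that every ticket leaves this step with a type, severity, team, and priority already set, so a human picks it up from the right queue instead of doing the routing.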

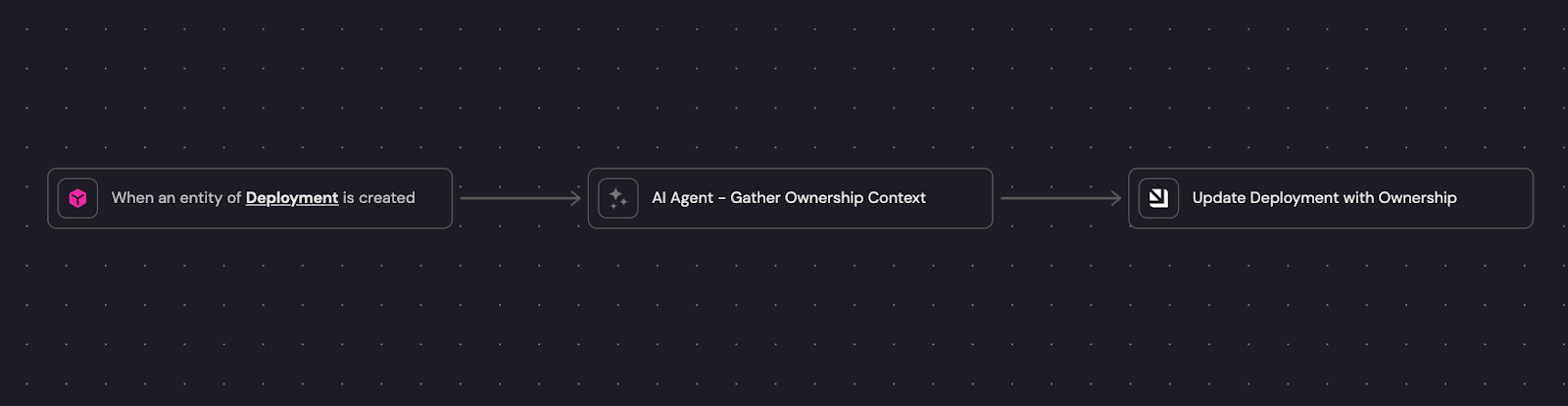

Catalog management. A customer built an event-driven agent that enriches ownership information on Port entities automatically when deployments, incidents, or new services are created. 72% of their total AI usage was machine-initiated.

Generally, we see two types of steps in workflows: steps that result in a text-based response (queries) and steps that result in an action being taken (actions).

Most steps today (73%) are query-based. An event fires, the AI gathers context, and posts a summary somewhere useful (RCAs, bug triage, service ownership). The remaining 27% take action - creating PRs, assigning tickets, or updating records.
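For teams that want to track this split in their own workflows, here is a minimal sketch of tagging each step as a query or an action and computing the mix. The `Step` type, the step names, and the sample workflow are hypothetical and are not taken from Port's data model.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Literal

StepKind = Literal["query", "action"]


@dataclass(frozen=True)
class Step:
    name: str
    kind: StepKind  # "query" returns text, "action" changes something


def kind_share(steps):
    """Return the fraction of steps that are queries vs actions."""
    counts = Counter(step.kind for step in steps)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {kind: counts[kind] / total for kind in ("query", "action")}


# Made-up workflow steps, for illustration only.
steps = [
    Step("summarize_rca", "query"),
    Step("summarize_bug_triage", "query"),
    Step("look_up_service_owner", "query"),
    Step("open_pull_request", "action"),
]
mix = kind_share(steps)
```

Watching how this ratio changes month over month is one way to see whether workflows are shifting from summarizing toward acting.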

I think we're about to see these numbers flip. The vast majority of AI usage will be for taking action rather than creating a block of text.

What we took from this

Six months from now, the teams building agentic workflows won't be the exception. Every team we studied started with simple questions and answers. But some iterated continuously on their portal and slowly trusted AI to do more than just answer questions. When they did, they unlocked the real shift: moving from manual engineering to autonomous engineering.

To see in more detail how leading teams are making this transition, have a look at our solution guides. They break down the exact patterns, architectures, and workflows behind becoming agentic in Port.
