Measuring the real impact of AI on your SDLC

AI is reshaping the SDLC, but speed alone doesn’t equal impact. Learn why measuring AI adoption is critical to understanding productivity, cost, and real engineering outcomes.

Introduction

AI is rapidly spreading throughout the software development lifecycle (SDLC). What began as a handful of specialized tools has become a fundamental shift in how teams plan, write, review, and ship software. And at the center of this transformation lies a core question: how are AI tools impacting my organization?

Engineering teams want to move fast and effectively adopt AI. They want to eliminate toil, reduce friction, and focus on the parts of engineering that actually require creativity and problem-solving. AI is uniquely positioned to accelerate that momentum - drafting code, generating tests, summarizing diffs, and answering technical questions in seconds.

But many organizations are beginning to ask a deeper question: what does “fast” actually look like in the context of Human + AI software development? Is AI helping teams move meaningfully faster - or simply feel faster? According to Google’s 2025 DORA Report, software development professionals do feel as if they are moving faster. In fact, 80% report they believe AI has increased their productivity, with 65% stating they rely on AI at least a “moderate amount” at work.

To answer that, engineering leaders need more than anecdotes. They need clarity. Platform engineers capture these measurements and expose this visibility, so engineering leaders can see exactly where AI accelerates work - and where it doesn’t.

Port integrates deeply with your SDLC data through its Flexible Data Model and Context Lake, which puts it in a strong position to shine a light on AI usage in your engineering organization. Let’s dig into why it is important to measure AI in your SDLC and how Port can give you this visibility.

Perceived vs. real impact

AI often arrives in engineering orgs like a shiny new toy. Teams experiment with prompts, try new coding assistants, and sprinkle AI into tasks where it “might help.” And much of this experimentation creates a strong perception of impact: code appears faster, answers come quickly, and tests write themselves.

But a feeling of speed is not the same as actual improvement. According to Gartner’s How to Capture AI-Driven Productivity Gains report, 52.8% of developers report the impact of AI on team productivity to be 10% or less. Nonetheless, according to the same survey, engineering leaders have a healthy appetite for implementing AI to augment development workflows - 77% reported that their enterprises are either piloting or deploying AI code assistants.

Without measurement, it’s impossible to know whether AI is improving outcomes or simply adding noise. Engineering leaders need reliable indicators of value to answer questions such as:

- Are cycle times actually decreasing?

- Are engineers shipping more, or simply iterating on AI-generated output?

- Which teams/individuals are leveraging AI the most in their workflows?

- Is software quality improving - or are issues being shifted further down the SDLC?

- Do PR reviews of AI-generated code take longer because of inconsistencies or subtle bugs?

- Have agentic workflows improved ticket resolution times?

When teams don’t measure the real impact of AI, adoption can drift into cargo-cult territory: lots of usage, lots of excitement, but limited proof of real value.

Engineering with or without AI is expensive

AI doesn’t just change how code is written - it changes how software is planned, reviewed, and shipped. The work shifts: from writing to reviewing, from debugging to prompting, from building to integrating.

These shifts can create meaningful gains, but they can also introduce new bottlenecks. AI brings speed, but it also introduces a new class of risks that aren’t always obvious at first glance. Risks such as AI-generated bugs, security issues, and tech debt are manageable if organizations track how AI is used. Organizations and engineering leaders need to be able to quantify where time is saved across the SDLC and how AI redistributes engineering effort. Human time is valuable, both in terms of user impact and economic cost.

Beyond human time, AI brings direct costs: token usage, licenses, infrastructure, security reviews, onboarding, and enablement. Without a clear view into usage and value, AI quickly turns into one of two things: a black hole that’s an easy budget cut, or an uncontrolled cost center that grows without accountability.


To avoid falling into either of these two categories, engineering leaders need strong ROI data. This data should support:

- Internal justification - “We saved X engineer-hours per month”

- Vendor selection - “We know exactly how each model is being used”

- Strategic direction - “This part of the workflow should be human-led, AI-led, or hybrid”

AI can absolutely produce real impact - but only if the value is visible.
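As a rough illustration of the kind of ROI arithmetic described above, the following minimal sketch compares the value of engineer-hours saved with direct AI spend for one month. Every name and figure here is hypothetical (`MonthlyAICosts`, `monthly_roi`, the hourly rate, and the cost numbers are assumptions for illustration, not data from this article or part of any Port API), and a real calculation would depend on how your organization estimates hours saved.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MonthlyAICosts:
    """Direct monthly AI costs (hypothetical categories, one currency)."""
    tokens: float
    licenses: float
    infrastructure: float
    enablement: float  # security reviews, onboarding, and training

    def total(self) -> float:
        return self.tokens + self.licenses + self.infrastructure + self.enablement


def monthly_roi(hours_saved: float, loaded_hourly_rate: float,
                costs: MonthlyAICosts) -> dict:
    """Compare the value of saved engineer-hours with direct AI spend."""
    value = hours_saved * loaded_hourly_rate
    spend = costs.total()
    net_value = value - spend
    # ROI is undefined when nothing was spent, so return None in that case.
    roi: Optional[float] = net_value / spend if spend else None
    return {"value": value, "spend": spend, "net_value": net_value, "roi": roi}


# Hypothetical inputs: 400 engineer-hours saved at a loaded rate of 90 per hour.
example_costs = MonthlyAICosts(tokens=6000, licenses=8000,
                               infrastructure=2000, enablement=4000)
example = monthly_roi(hours_saved=400, loaded_hourly_rate=90, costs=example_costs)
print(example)
```

The hard part in practice is the `hours_saved` input: it is only as trustworthy as the measurement behind it, which is why usage and outcome data matter more than the formula itself.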

How Port can help you measure AI in your SDLC

As AI becomes embedded across the SDLC, engineering organizations face a dual responsibility: harness its acceleration while maintaining control, quality, and accountability. That balance is only possible with clear, continuous visibility into how AI is being used and where it’s delivering value.

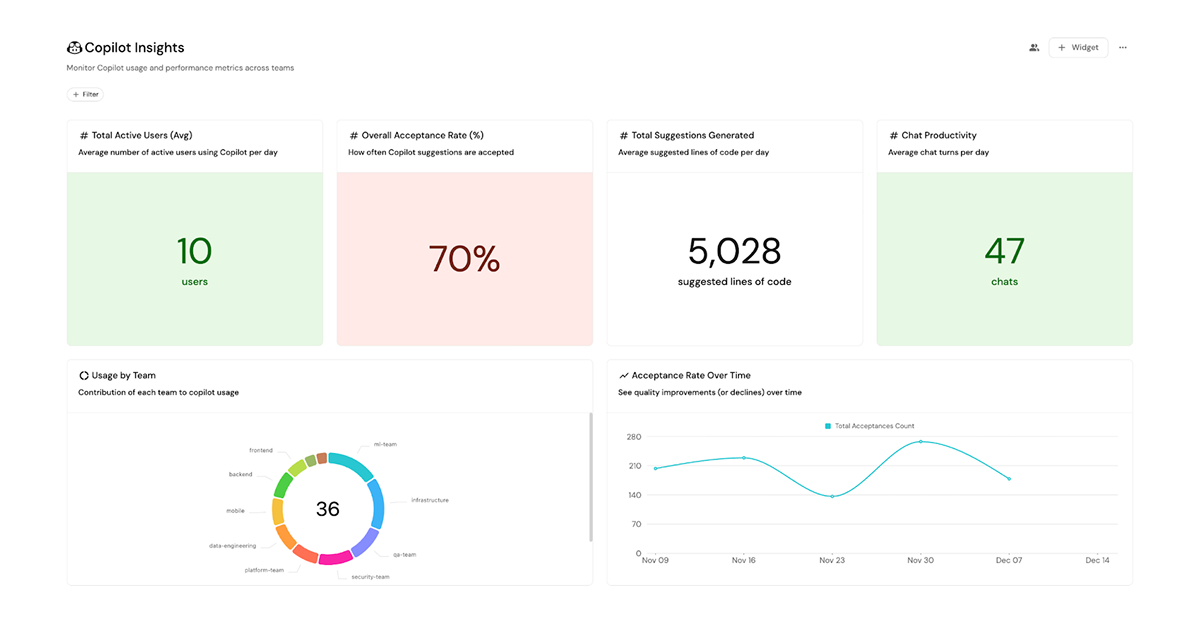

That’s why Port introduced native support for monitoring adoption and usage of AI developer tools - like Claude, Cursor, and Copilot - directly from the Port software catalog. Through these integrations, platform engineering teams can build metrics that improve AI adoption across the organization, give leadership actionable dashboards that show real cost drivers, and identify patterns or risks that emerge across repositories and teams. Metrics you can track include:

- Economic cost

- Acceptance/merge rate of AI-generated code

- Team-specific usage

- PR cycle time with and without AI

- And much more

This allows engineering organizations to correlate AI tool usage with engineering outcomes.
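To make two of these metrics concrete, here is a minimal sketch that computes median PR cycle time with and without AI assistance, plus the merge rate for each group. It assumes PR records have already been exported as plain dictionaries. The field names (`ai_assisted`, `opened_at`, `merged_at`) and the sample data are hypothetical and do not represent Port's data model or API.

```python
from datetime import datetime
from statistics import median

# Hypothetical PR records; merged_at is None for PRs that were never merged.
SAMPLE_PRS = [
    {"ai_assisted": True, "opened_at": datetime(2025, 1, 6, 9), "merged_at": datetime(2025, 1, 6, 15)},
    {"ai_assisted": True, "opened_at": datetime(2025, 1, 7, 9), "merged_at": datetime(2025, 1, 8, 9)},
    {"ai_assisted": True, "opened_at": datetime(2025, 1, 8, 9), "merged_at": None},
    {"ai_assisted": False, "opened_at": datetime(2025, 1, 6, 9), "merged_at": datetime(2025, 1, 7, 21)},
    {"ai_assisted": False, "opened_at": datetime(2025, 1, 7, 10), "merged_at": datetime(2025, 1, 8, 10)},
]


def cycle_time_hours(pr: dict) -> float:
    """Hours between opening and merging a PR."""
    return (pr["merged_at"] - pr["opened_at"]).total_seconds() / 3600


def median_cycle_time_by_ai(prs: list) -> dict:
    """Median cycle time in hours for merged PRs, split by AI assistance."""
    groups = {"ai_assisted": [], "not_ai_assisted": []}
    for pr in prs:
        if pr["merged_at"] is None:
            continue  # unmerged PRs have no cycle time
        key = "ai_assisted" if pr["ai_assisted"] else "not_ai_assisted"
        groups[key].append(cycle_time_hours(pr))
    return {key: (median(hours) if hours else None) for key, hours in groups.items()}


def merge_rate(prs: list, ai_assisted: bool):
    """Share of PRs in the chosen group that were merged, or None if empty."""
    subset = [pr for pr in prs if pr["ai_assisted"] == ai_assisted]
    if not subset:
        return None
    return sum(pr["merged_at"] is not None for pr in subset) / len(subset)


cycle_times = median_cycle_time_by_ai(SAMPLE_PRS)
print(cycle_times)
print(merge_rate(SAMPLE_PRS, True), merge_rate(SAMPLE_PRS, False))
```

A gap between the two medians is a correlation, not proof that AI caused the difference. AI-assisted PRs may differ in size or type, so it is worth segmenting by team, repository, or PR size before drawing conclusions.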

Engineering leaders can quantify ROI, optimize spend, and shape clear guidelines for when and how AI should support the development process. Most importantly, they gain the insight needed to scale AI safely and strategically - ensuring that teams truly move faster, without losing control. Beyond dashboards and metrics, Port’s recently released AI Assistant lets you ask questions about AI usage (and other parts of your software delivery process) in natural language.

AI can transform engineering. With the right visibility, it can do so predictably, responsibly, and sustainably.

If you want to learn more about our integrations, check out our integration guides.
