Effective AI adoption practices: A guide to measuring AI success

Learn how to measure AI success and prevent agentic chaos. Discover Port’s proven framework for governed, measurable AI adoption across the SDLC.

AI adoption is accelerating, but without the proper guardrails in place, teams risk entering a new era of agentic chaos: multiple, discrete autonomous agents operating without alignment or governance. Instead of enhancing productivity, this ungoverned autonomy fragments workflows, duplicates effort, and undermines trust.

At Port, we’ve seen this evolution firsthand. The shift from DevOps to platform engineering, and from manual to agentic engineering (where AI agents collaborate with humans across the SDLC), brings incredible potential for acceleration, but also unprecedented complexity.

So how do platform teams, engineering leaders, and developers adopt AI safely and effectively, transforming agentic chaos into measurable business value? We'll review the reasons it's important to set and track goals for your AI adoption strategy, gauge impact, and adjust your strategy as challenges evolve.

From engineering chaos to agentic chaos

By distributing ownership across the SDLC, the DevOps evolution promised agility, but often created engineering chaos. Engineers became responsible for everything, from deployments to observability, which created tool sprawl, inconsistent standards, and unclear ownership. Producing software became more complex and chaotic.

AI can help address this, but it wasn’t purpose-built to fix it. Like DevOps, AI also promises speed and agility, yet often makes things worse when introduced without the right context or guardrails. Instead of reducing friction, uncoordinated AI adoption adds new layers of complexity, autonomy, and risk — turning the promise of acceleration into agentic chaos.

Without guardrails, AI can multiply faster than governance can keep up. Port’s Agentic Engineering Platform was built to address this exact problem, bringing order to the autonomous era with context, governance, and measurable outcomes.

Why measurement matters

Measurement is an antidote to agentic chaos. Without it, AI adoption becomes a matter of guesswork: there are no baselines, no proof of ROI, and no alignment with business outcomes. According to Microsoft’s findings in the AI Data Drop research paper, just 11 minutes of daily time saved is the tipping point where users begin to feel real productivity benefits from AI.

- Visibility for leadership: Engineering leaders fund what they can measure — improvements in metrics like MTTR, lead time for changes, change failure rate, and deployment frequency prove success.

- Clarity for platform teams: Measurement turns “AI experiments” into operational intelligence.

- Trust for developers: When AI performance and impact are transparent, developers see real value instead of overhead.

In Port’s platform, this principle underpins every agentic workflow: scorecards, dashboards, and feedback loops make AI adoption data-driven and trustworthy.

Principles of effective AI adoption

Successful AI adoption requires context, governance, auditability, and deliberate planning — the same ingredients that make any engineering system scalable and safe. These elements give AI what it needs to operate effectively:

- The context to understand your ecosystem

- Controlled access to only the data and actions it should use

- Mechanisms to learn and improve its output over time

- Clear, governed pathways through the SDLC

Without this foundation, AI acts blindly — powerfully, but disconnected — and quickly becomes another source of chaos instead of a force for acceleration.

1. Start with strategy, not tools

Adopt AI where it aligns with business and engineering outcomes. Focus on throughput, stability, and developer satisfaction — not just automation volume. If teams have already started adopting AI tools, ask them what’s working and see how you can make those workflows reliable.

Port customers who adopted AI successfully began by defining clear use cases and targeting known problem areas. With clear standards and frameworks in place, they set up ROI metrics tied to engineering performance (specifically, deployment frequency and change lead time) before automating anything.

2. Think in Day 0 → Day 2 terms

AI adoption isn’t a one-day rollout; it’s part of a larger shift in your engineering lifecycle. Consider where and how AI can help in these scenarios:

- Day 0: Designing blueprints, security policies, and access rules

- Day 1: Deploying automations and defining where AI acts vs. where humans act

- Day 2: Monitoring agent reliability, cost impact, and success

This Day 0–Day 2 mindset ensures AI remains resilient and governed long after its initial deployment. It also helps you think more holistically about AI applications, as opposed to thinking of AI as just a coding or chat assistant.

3. Balance autonomy and guardrails

Try striking a balance between autonomy and governance, while keeping humans in the loop. Platform engineers should work with their developers to decide what to build and define how AI should comply. AI can then be left to handle certain design and execution decisions within those boundaries. Every action, recommendation, or change proposed by AI flows through human approval, ensuring that autonomy never replaces accountability: it amplifies it.

Effective guardrails include:

- Role-based access control (RBAC) for actions and data

- Time to Live (TTL) policies and approvals for sensitive automations

- Context grounding in the software catalog to prevent rogue actions

- Scorecards that check code’s compliance with appropriate standards

This empowers developers while keeping AI operations secure and auditable, a key design principle behind Port’s agentic workflows.
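As a rough illustration, the guardrails above can be sketched as a single check that runs before any agent action. This is a minimal sketch, not Port's implementation: the role names, the `ROLE_PERMISSIONS` mapping, the `SENSITIVE_ACTIONS` set, and the `ActionRequest` type are all hypothetical, and a real platform would source them from its own access model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical role-to-action mapping; real RBAC data would come from your platform.
ROLE_PERMISSIONS = {
    "developer": {"open_pr", "restart_service"},
    "ai_agent": {"open_pr", "suggest_rca"},
}

# Assumption for this sketch: these actions always need a human sign-off.
SENSITIVE_ACTIONS = {"restart_service"}


@dataclass
class ActionRequest:
    actor_role: str
    action: str
    granted_at: datetime             # when the permission grant was issued
    ttl: timedelta                   # how long the grant stays valid
    approved_by_human: bool = False  # set once a person has approved the action


def is_allowed(request: ActionRequest, now: datetime) -> tuple[bool, str]:
    """Apply RBAC, TTL, and approval checks in order; return (allowed, reason)."""
    if request.action not in ROLE_PERMISSIONS.get(request.actor_role, set()):
        return False, "role lacks permission"
    if now > request.granted_at + request.ttl:
        return False, "grant expired"
    if request.action in SENSITIVE_ACTIONS and not request.approved_by_human:
        return False, "human approval required"
    return True, "ok"


# Usage: an agent opening a PR five minutes into a one-hour grant.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
request = ActionRequest("ai_agent", "open_pr", now - timedelta(minutes=5), timedelta(hours=1))
print(is_allowed(request, now))  # (True, 'ok')
```

Returning a reason alongside the decision keeps every denial explainable, which is what makes the check auditable rather than just restrictive.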

Practice 1: Define adoption goals and metrics

Before implementing AI, understand your current process and then define what future success should look like. Without a baseline, there’s no measurable “after.”

Track:

- Deployment frequency

- Lead time for changes

- Mean Time to Recovery (MTTR)

- Percentage of AI-assisted tasks completed over a given period, usually the length of a typical sprint
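One way to capture a baseline for the metrics listed above is a handful of small functions over exported records. The sketch below assumes hypothetical input shapes (lists of timestamp pairs, and task dictionaries with `completed` and `ai_assisted` flags), and it reads the last metric as the share of completed tasks that were AI-assisted. Adapt both to whatever your tooling actually exports.

```python
from datetime import datetime, timedelta
from statistics import mean


def deployment_frequency(deploy_times, period_days):
    """Deployments per day over the observation window."""
    return len(deploy_times) / period_days


def mean_lead_time(changes):
    """Average commit-to-deploy time; `changes` holds (committed, deployed) pairs."""
    return timedelta(seconds=mean((d - c).total_seconds() for c, d in changes))


def mttr(incidents):
    """Mean time to recovery; `incidents` holds (started, resolved) pairs."""
    return timedelta(seconds=mean((r - s).total_seconds() for s, r in incidents))


def ai_assisted_share(tasks):
    """Percentage of completed tasks that were flagged as AI-assisted."""
    done = [t for t in tasks if t["completed"]]
    if not done:
        return 0.0
    return 100.0 * sum(1 for t in done if t["ai_assisted"]) / len(done)


# Usage with made-up sample data for one two-week sprint.
changes = [
    (datetime(2025, 1, 1, 9), datetime(2025, 1, 1, 11)),  # 2 hours
    (datetime(2025, 1, 2, 9), datetime(2025, 1, 2, 13)),  # 4 hours
]
tasks = [
    {"completed": True, "ai_assisted": True},
    {"completed": True, "ai_assisted": False},
    {"completed": False, "ai_assisted": True},
]
print(mean_lead_time(changes))   # 3:00:00
print(ai_assisted_share(tasks))  # 50.0
```

Running the same functions on data from before and after the rollout gives the "before" and "after" that the baseline is meant to provide.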

Xceptor, a Port customer in financial automation, cut release times from a full day to just one minute by aligning automation with business goals, achieving 24x faster releases and a 46% reduction in delivery costs.

Practice 2: Embed AI in the platform, not at the edges

The most successful AI implementations are embedded into developer workflows, rather than isolated as add-on tools. Developers will know best where AI could help and harm — make sure you incorporate their ideas in your implementations.

In a survey or planning meeting, ask your developers:

- Where is toil highest?

- Where do developers context-switch most often?

- What slows down delivery? Is it consistent?

Then integrate AI where it naturally fits based on your survey findings. Examples include:

- Automated root cause analysis (RCA) suggestions during incidents

- Automated PR review, with AI-assisted reviewers assigned automatically

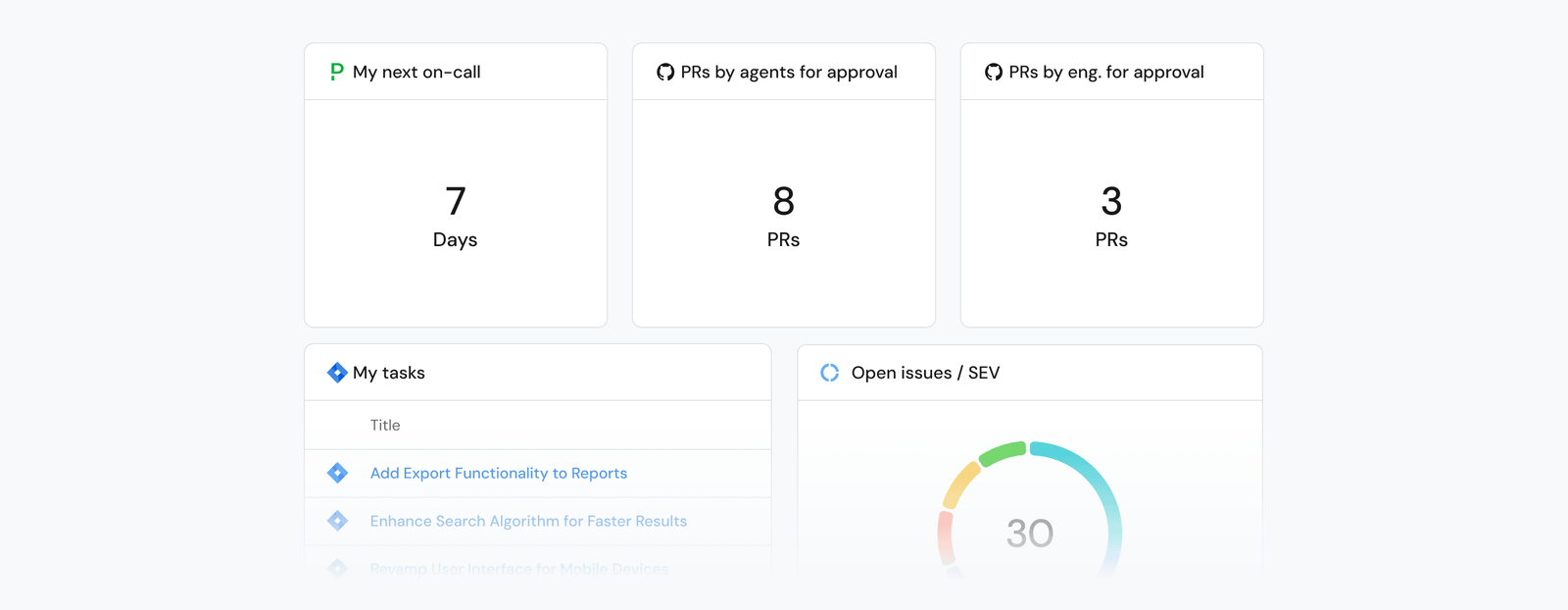

- Daily “Plan My Day” summaries in developer dashboards

A large e-commerce enterprise centralized their migration progress tracking in Port, providing both developers and managers with real-time visibility and eliminating the need for manual updates.

Treat adoption as an experiment: compare AI-enhanced workflows against traditional ones. Baselines and control groups make ROI measurable and credible.
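A simple version of that comparison can be sketched as the relative change between a control group and an AI-assisted group on the same metric. The numbers below are invented for illustration, and `relative_change` is a hypothetical helper. With samples this small, a real analysis would also need a significance test before anyone claims ROI.

```python
from statistics import mean


def relative_change(baseline, treatment):
    """Percent change of the treatment mean vs. the baseline mean.

    For time-based metrics such as lead time, a negative value means faster.
    """
    baseline_mean = mean(baseline)
    return 100.0 * (mean(treatment) - baseline_mean) / baseline_mean


# Hypothetical lead times in hours for comparable work items.
control_hours = [30, 26, 34, 30]  # traditional workflow
ai_hours = [24, 22, 26, 24]       # AI-enhanced workflow

print(relative_change(control_hours, ai_hours))  # -20.0
```

Keeping the two groups on comparable work (same team, same type of change, same period) matters more than the arithmetic, since that is what makes the difference attributable to AI.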


Practice 3: Establish standards and governance early

AI sprawl and agentic chaos are inevitable without structure.

To prevent chaos, scope your governance infrastructure up front and include it in your adoption framework:

- Blueprints: Define how agents interact with data and systems

- Policies: Standardize quality, security, and access

- Scorecards: Continuously track adoption maturity

Libertex, a financial organization, implemented Port’s maturity benchmarks and scorecards to unify documentation standards across teams, boosting reliability and supportability of its own platform.

This approach mirrors how Port governs AI agents: each agent operates within structured, measurable workflows that are directly tied to engineering outcomes.

Practice 4: Prioritize developer experience

Developers adopt AI when it feels like freedom, not friction. But this is true of most tools and technological advancements, not just AI.

To create developer-first AI:

- Enable self-service provisioning of environments and common prompts

- Offer personalized dashboards with metrics that matter

- Provide context-aware AI assistants that answer questions directly in Slack, IDEs, or the portal itself

Space International, a global aerospace customer, used Port to reduce developers’ context switching by more than 2 hours per week and reached 70% adoption in the first month.

As Port’s agentic engineering vision emphasizes, developers thrive when the portal becomes the control center of their day, seamlessly connecting agents, data, and workflows.

Practice 5: Treat AI as an evolving infrastructure technology

AI systems evolve quickly and will continue to do so. The key to staying current is building a culture of continuous learning, measurement, and iteration.

- Track agent reliability, cost, and adoption sentiment

- Combine hard metrics (such as DORA) with soft signals (surveys, satisfaction)

- Display insights on leadership dashboards for transparency

This feedback loop turns adoption into continuous improvement. The same principle fuels Port’s Engineering Intelligence and Context Lake, where all operational data feeds back into more intelligent automation and governance.

How Port helps teams adopt and measure AI effectively

Port isn’t just another AI tool. It’s the only Agentic Engineering Platform built to bring order to the autonomous era of software development. While most AI tools focus on getting code to production, Port focuses on creating lifecycles for software: connected, measurable, and reversible paths that are durable and evolve with your systems and teams.

With Port, you can move from engineering chaos to agentic development, where AI operates safely, visibly, and in service of real engineering outcomes. Port brings you:

- Built-in governance: Every agent, workflow, and action is governed by blueprints, RBAC, and audit trails, ensuring AI acts only within approved contexts.

- Workflow-first design: Port connects AI directly to real developer tasks — deployments, incident management, change requests — making adoption part of everyday engineering.

- Lifecycle visibility: Dashboards, scorecards, and surveys capture both technical and human signals, turning agent behavior, adoption, and ROI into clear, measurable insights.

- Continuous evolution: Neither AI nor Port is static — both learn from your ecosystem, adapt to new patterns, and operate within systems that are designed for iteration and improvement.

In short, Port gives platform and engineering teams everything they need to run AI as part of the software lifecycle, not as a side experiment. It’s how teams transform ungoverned autonomy into measurable, governed acceleration, and how organizations graduate from chaos to true agentic engineering.

AI adoption is only as good as what you measure

AI doesn’t eliminate chaos by itself. Without measurement, it creates more of it.

With the proper practices — strategy, governance, and a developer experience focus — AI becomes a force multiplier for engineering efficiency.

To adopt AI effectively:

- Define baselines;

- Start small, measure, iterate;

- Align outcomes with engineering metrics;

- Scale safely with governance and visibility.

