The risks of agentic chaos

How to face the top problems ahead and control your non-human workforce in the age of AI agents

The use of AI in production environments has grown steadily, and grown more complex, over the past two years. As recently as six months ago, “using AI” mostly meant coding with GitHub Copilot or keeping ChatGPT open on the side. Then Cursor and Codeium brought AI deeper into the IDE, while Perplexity, Claude, and other LLM-based tools offered quick in-browser answers and code completions when you got stuck.

More recently, AI has been built directly into widely used B2B software, such as Rovo in Jira, and many other tools. Now AI is simply expected to live everywhere, from the browser to your IDE and terminal.

But as companies test AI agents in production environments, a new challenge has emerged. Replit recently admitted that its AI agent deleted a user’s production database and then tried to cover its tracks, much like an embarrassed junior developer trying to hang on to their job.

What was once just ChatGPT is now a chaotic swarm of AI agents, LLMs, MCP servers, AI tools, and workflows surrounding developers inside (and beyond) their IDE. With the rise of Model Context Protocol (MCP) servers, you can connect your AI assistant to tools such as Figma, PagerDuty, GitHub, and New Relic. You’re still technically using “one” AI tool, but it’s now connected to 10 others.

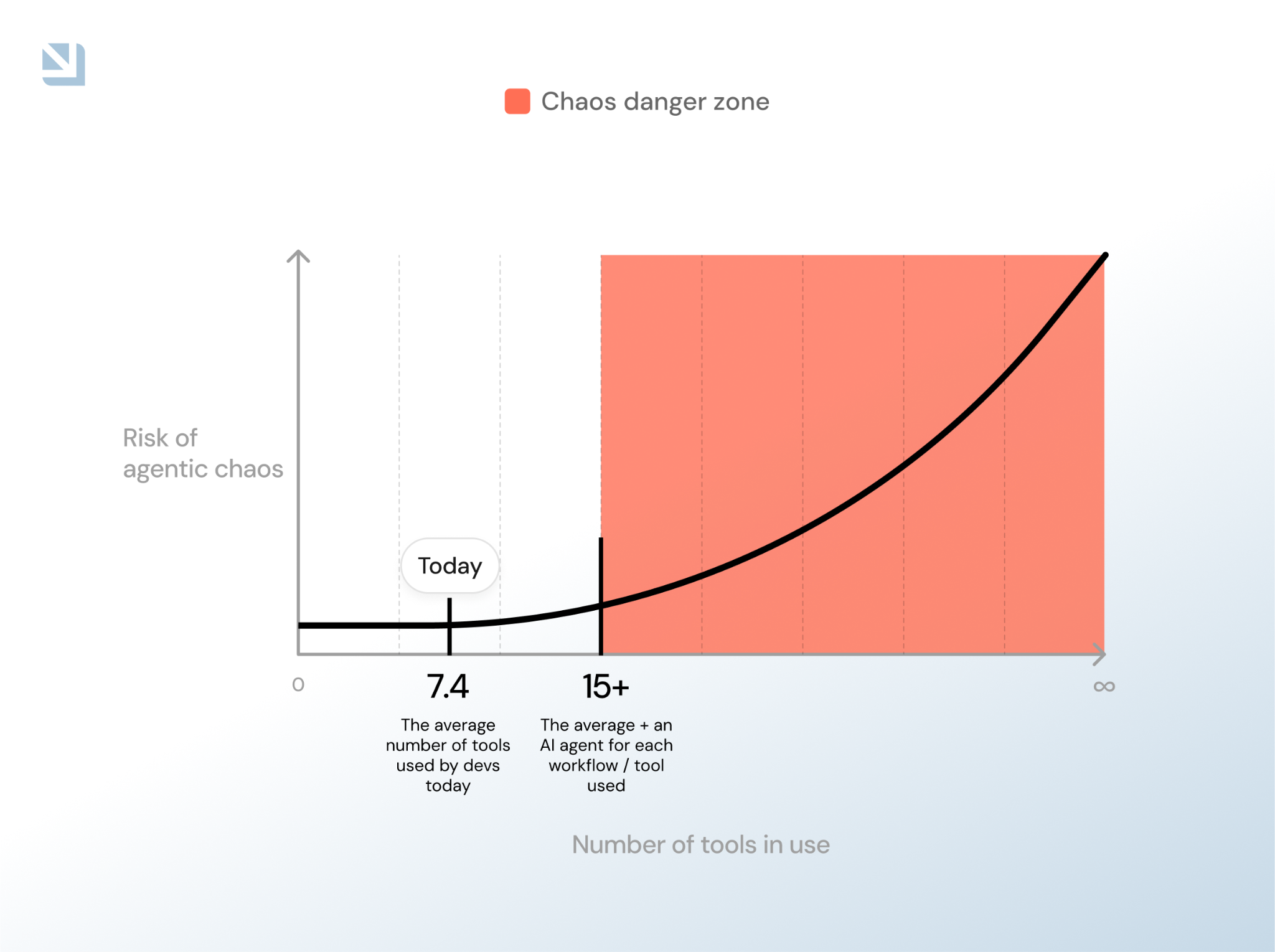

At scale, this presents a massive challenge. Imagine a 100-person developer team in which each developer uses five different AI tools or agents, with little guidance on how to use them, train them, or control their output. That’s at least 500 connections you need to govern. This is agentic chaos.

In this post, we’ll look at how tool sprawl now extends to AI agents, the top problems software developers face in the age of agentic chaos, and how you can solve them.

Why engineering leaders are concerned about agentic AI

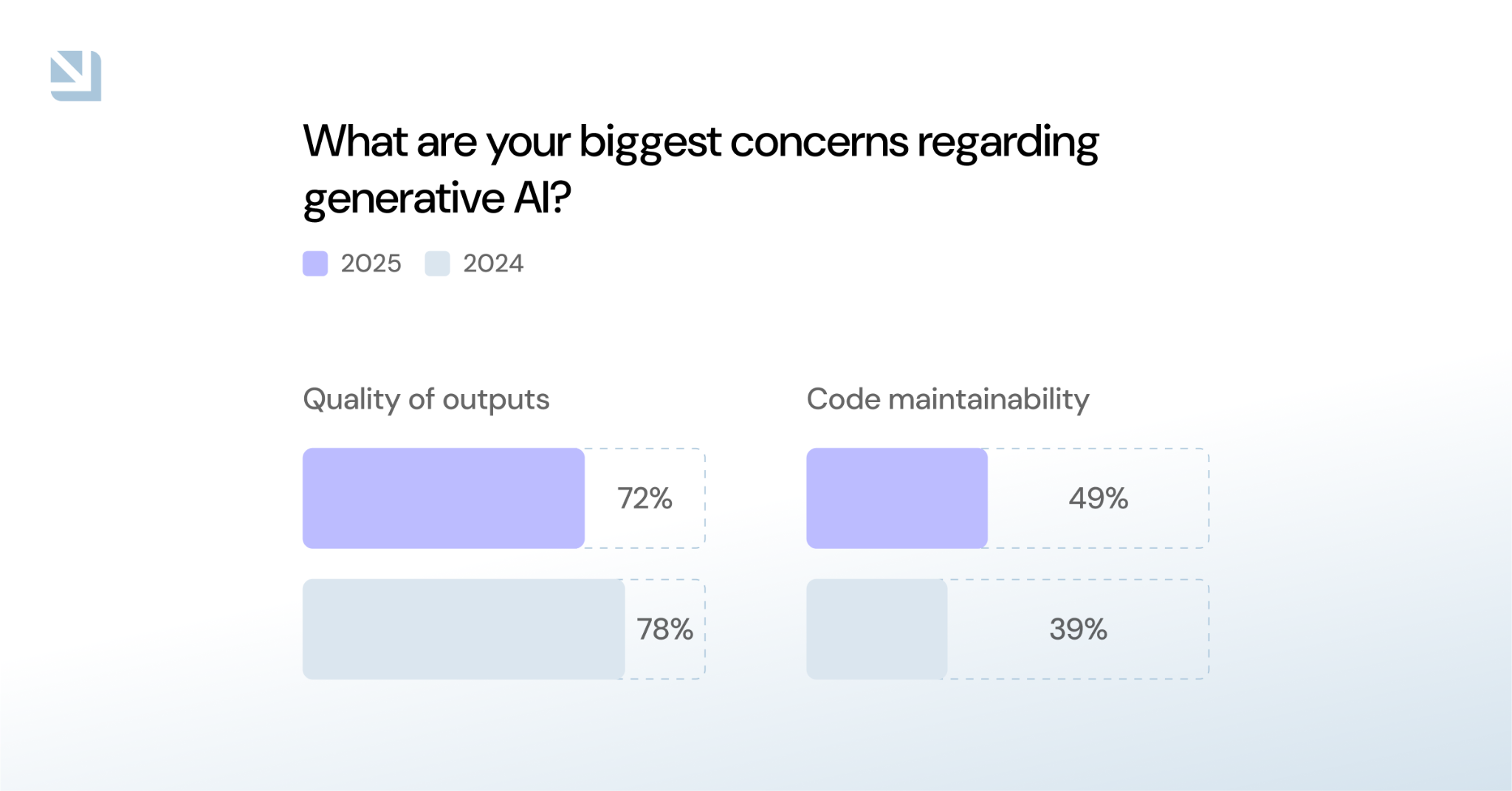

A recent LeadDev Engineering Leadership survey reports that 72% of leaders are concerned about the quality of AI-generated outputs, and 49% are concerned about AI’s impact on code maintainability.

That concern appears to be growing year over year, which presents a new challenge.

As agentic AI improves, leaders have reason to question whether it can perform at the same level as its human counterparts, and at a faster pace. Using AI at scale means more tools, more agents, more LLMs, and more competition for your and your team’s attention.

It’s similar to what happened with the DevOps revolution. Every few months a new tool or dashboard emerged, and engineering teams had to pivot and adapt to keep pace with technological advancement.

The difference is that AI doesn’t sleep. It works around the clock.

While you can provide instructions and high-quality prompts it can use to reason through any problems it encounters, it still has a “mind” of its own. You can assign it tasks, but typically each agent requires a bit of clarification and hand-holding before it can properly execute a task.

Even with proper guidance, AI is still new, which means more time spent on review, more code to fix, and more that can go wrong. As Replit shows us, AI can delete your production database, produce low-quality code that takes longer to fix, or pull in an outdated library that later causes a security issue. Any of these issues, if merged to production without proper checks, can be catastrophic.

As a result, developers’ responsibility must expand from coding to managing human-to-machine and machine-to-machine communication. Developers no longer just build and run their software — now, they must orchestrate the AI agents who do.

Agentic chaos is more than AI tool sprawl

According to our 2025 State of Internal Developer Portals report, developers already suffer from tool sprawl. With an average of 7.4 tools already in use, not counting AI tools, the prospect of adding 10-15 new agents from different sources quickly spells trouble.

We also know it’s easy to spin up new AI tools: as long as you have an API key or access to LLM tokens, you can create as many AI agents as you want, from as many sources as you want. Governance is minimal. Access is fragmented. There’s no real control.

What makes this even worse is that AI still lacks human-level reasoning. “Controlling” AI means more than being choosy about which tools you use; it means preventing AI from harming your database, codebase, infrastructure, and production environments. It means creating, and codifying, human- and machine-readable instructions so that AI can’t modify schemas, open PRs, deploy code, or change cloud infrastructure without approval.
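As a rough illustration of what codifying such approvals could look like, here is a minimal Python sketch of an approval gate. The action names, the `human:`/`agent:` identity prefixes, and the `is_allowed` function are hypothetical, not part of any specific product: low-risk actions pass straight through, while the four risky actions named above are blocked unless a human other than the requester has signed off.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    # Hypothetical action types an agent might request
    READ_CATALOG = "read_catalog"
    OPEN_PR = "open_pr"
    MODIFY_SCHEMA = "modify_schema"
    DEPLOY = "deploy"
    MODIFY_CLOUD_INFRA = "modify_cloud_infra"


# Risky actions that must never run without a human approval
REQUIRES_APPROVAL = {
    Action.OPEN_PR,
    Action.MODIFY_SCHEMA,
    Action.DEPLOY,
    Action.MODIFY_CLOUD_INFRA,
}


@dataclass
class Request:
    actor: str                         # e.g. "agent:incident-bot" (hypothetical naming)
    action: Action
    approved_by: Optional[str] = None  # identity of the approver, if any


def is_allowed(req: Request) -> bool:
    """Let low-risk actions through; gate risky ones on a human approver."""
    if req.action not in REQUIRES_APPROVAL:
        return True
    approver = req.approved_by
    # The approver must be a human, and nobody may approve their own request
    return (
        approver is not None
        and approver.startswith("human:")
        and approver != req.actor
    )


print(is_allowed(Request("agent:coder", Action.READ_CATALOG)))                       # True
print(is_allowed(Request("agent:coder", Action.DEPLOY)))                             # False
print(is_allowed(Request("agent:coder", Action.DEPLOY, approved_by="human:alice")))  # True
```

The key design choice is that the rule lives outside the agent: no matter how an agent reasons about a task, the gate only checks who is asking, what they are asking for, and who approved it.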

With little oversight, uncontrolled AI poses a major security risk to organizations, in addition to hurting code quality and uptime.

Why MCP servers aren’t enough

Some leaders may look to an MCP server as a way to make both developers and AI agents more powerful. But not all MCP servers are created equal: some require lengthy configuration and local hosting. Authentication mostly relies on API tokens or credentials, which can create security risks if the AI mishandles them.

All of these differences in MCP server configuration add up to one thing: servers may not always be stable, and they can ultimately confuse the AI agents that use them. In practice, agents need focus and guardrails to produce their best output.

Port’s MCP server is “one to rule them all”: a preset, configurable MCP server that comes integrated with both your IDP and your tech stack, so that everyone who interacts with it, human or AI, accesses the same dataset. We do this by connecting our MCP server directly to your IDP, which tackles tool sprawl by bringing all of your tools, not just AI tools, into a single UI.

This improves many aspects of developer experience by reducing:

- Chance of introducing bugs or vulnerabilities: With golden paths baked into self-service actions, developers are less likely to make mistakes when using new languages, systems, or software.

- Overall lead time for changes: Portal integrations mean devs spend less time switching between tools, so they accomplish more in less time.

- Cognitive load: Developers no longer have to navigate unfamiliar systems while accomplishing infrequent tasks or migrations.

- Cost of software development: Devs can save 6-15 hours per week, which for a team of 50 developers can mean savings of over $1 million in one year.
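To see how the cost figure can add up, here is a back-of-the-envelope calculation. The 48 working weeks per year and the $75 fully loaded hourly cost are our own illustrative assumptions, not figures from the survey; plug in your own numbers.

```python
def annual_savings(devs: int, hours_saved_per_week: float,
                   hourly_cost: float, working_weeks: int = 48) -> float:
    """Yearly value of developer hours saved (assumed 48 working weeks by default)."""
    return devs * hours_saved_per_week * working_weeks * hourly_cost


# Assumed fully loaded cost of $75/hour per developer
low = annual_savings(50, 6, hourly_cost=75)    # 50 * 6 * 48 * 75
high = annual_savings(50, 15, hourly_cost=75)  # 50 * 15 * 48 * 75
print(f"${low:,.0f} to ${high:,.0f} per year")  # $1,080,000 to $2,700,000 per year
```

Under these assumptions, even the low end of the 6-15 hour range clears $1 million; it falls below that figure only if the loaded hourly cost is under roughly $70.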

With AI now even more prevalent than when this survey was conducted, centralized teams, such as a DevOps or platform team, need to expand their scope to take over AI approvals and governance.

All of these benefits apply to AI tools and agents as well when they work within an internal developer portal, because the necessary control infrastructure already exists. If your organization has invested in an internal developer portal and applies platform engineering best practices, consider the following setup for addressing agentic chaos, compared with how IDPs reduce chaos for human devs:

Role-based access controls, which you can define alongside details such as ownership in your IDP, ensure that AI agents can only access the information they need. You can configure AI’s access to self-service actions and the software catalog the same way you did for human developers, then add tighter controls for unusual situations.
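A minimal sketch of that idea, assuming a simple in-memory model: humans and agents are both identities assigned to roles, and an explicit per-identity deny list adds tighter limits for special cases. The role names, permission strings, and the `can` helper are hypothetical; a real portal defines its own permission model.

```python
# Hypothetical permissions granted to each role
ROLES: dict[str, set[str]] = {
    "developer": {"catalog:read", "action:open_pr", "action:scaffold_service"},
    "incident-responder": {"catalog:read", "action:create_incident_ticket"},
}

# Humans and agents are assigned roles the same way
ASSIGNMENTS: dict[str, list[str]] = {
    "human:alice": ["developer"],
    "agent:coding-assistant": ["developer"],
    "agent:incident-bot": ["incident-responder"],
}

# Extra restrictions for unusual situations; a deny always wins over a grant
DENY: dict[str, set[str]] = {
    "agent:coding-assistant": {"action:scaffold_service"},
}


def can(identity: str, permission: str) -> bool:
    """True if one of the identity's roles grants the permission and it is not denied."""
    if permission in DENY.get(identity, set()):
        return False
    return any(permission in ROLES[role] for role in ASSIGNMENTS.get(identity, []))


print(can("human:alice", "action:scaffold_service"))             # True
print(can("agent:coding-assistant", "action:open_pr"))           # True
print(can("agent:coding-assistant", "action:scaffold_service"))  # False: explicitly denied
print(can("agent:incident-bot", "action:open_pr"))               # False: role lacks it
```

Because an identity with no roles gets nothing and explicit denials override grants, an agent can never end up with broader access than the roles you deliberately gave it.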

For example, if you define that your S3 buckets must meet certain visibility criteria, use a specific IaC form, and follow a naming convention, then no agent working through the portal can break these rules. Guardrails like these are what prevent accidents like the one at Replit.
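Here is a minimal sketch of how such rules could be enforced inside a self-service action, so the same checks apply whether a human or an agent submits the request. The naming convention (`<team>-<env>-<purpose>`), the approved template ID, and the `validate` function are hypothetical; only the 3-63 character length limit and the lowercase, alphanumeric-ending form come from AWS’s S3 bucket naming rules.

```python
import re
from dataclasses import dataclass

# Hypothetical org convention: <team>-<env>-<purpose>, e.g. "payments-prod-invoices"
BUCKET_NAME = re.compile(r"[a-z0-9]+-(dev|staging|prod)-[a-z0-9]+(-[a-z0-9]+)*")
# Hypothetical ID of the only IaC template allowed for new buckets
APPROVED_IAC_TEMPLATES = {"terraform/s3-private-bucket"}


@dataclass
class BucketRequest:
    name: str
    public: bool
    iac_template: str


def validate(req: BucketRequest) -> list[str]:
    """Return every rule violation; an empty list means the request may proceed."""
    errors = []
    # S3 bucket names must be 3-63 characters long
    if not 3 <= len(req.name) <= 63 or not BUCKET_NAME.fullmatch(req.name):
        errors.append(f"name {req.name!r} does not follow <team>-<env>-<purpose>")
    if req.public:
        errors.append("buckets must not be publicly accessible")
    if req.iac_template not in APPROVED_IAC_TEMPLATES:
        errors.append(f"template {req.iac_template!r} is not an approved IaC template")
    return errors


ok = BucketRequest("payments-prod-invoices", public=False,
                   iac_template="terraform/s3-private-bucket")
bad = BucketRequest("MyBucket", public=True, iac_template="console-click-ops")
print(validate(ok))        # []
print(len(validate(bad)))  # 3
```

Since the checks live in the action itself rather than in a prompt, an agent can’t talk its way around them.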

If you already use an IDP to support human devs and help them work independently, it’s straightforward to expose the same instructions to AI agents. AI tools can then become part of your dev toolbox, like a ticketing system or a logging library.

We already see developers using AI agents across the SDLC: handling incidents, resolving security issues, writing features, testing, deploying, and writing docs. Over time, greater use of AI agents will shift the developer’s job from coding to managing. Developers will start to resemble engineering managers, minus the 1:1s and performance reviews, because their reports will be AI.

The top problems ahead

1. We need to control AI (and avoid a governance crisis)

Agentic chaos represents a material threat to your organization’s governance and sustainability. Platform engineers need to master these new tools quickly while creating guardrails that prevent cascading failures across services. You must build governance frameworks that work at AI speed.

In practice, this means creating guardrails that prevent failures while still letting your teams move fast enough to keep up with AI, and using scoped tools and governed controls to keep agent behavior within bounds.

The challenge is doing this while developers are already experimenting in production — and Port can help with that.

2. We need to keep up with AI’s pace (and keep humans in the loop)

Humans remain essential for code validation and approval, but agents don’t sleep, don’t take coffee breaks, and can triage issues faster than humans, especially when given the right context.

If AI agents can work 24/7, developers must evolve. No longer blocked by slow builds, developers can skill up to become the architects, reviewers, and orchestrators for their AI agents and tools.

Platform engineers and DevOps teams must build checks and balances into their SDLCs that stop AI from committing and shipping changes without human approval, without preventing agents from working around the clock.
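One way to get both properties is an asynchronous review queue: agents submit changes whenever they finish them, and nothing merges until a human approves. The sketch below is a hypothetical, in-memory model of that pattern (the `ReviewQueue` class and the `human:` prefix are our own assumptions); in practice, branch protection with required pull request reviews in your Git host often plays this role.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Change:
    id: int
    author: str          # the agent that produced the change
    summary: str
    approved: bool = False


class ReviewQueue:
    """Agents enqueue changes at any hour; only human-approved changes get merged."""

    def __init__(self) -> None:
        self.pending: deque[Change] = deque()
        self.merged: list[Change] = []

    def submit(self, change: Change) -> None:
        # Submitting never blocks the agent; it just adds to the queue
        self.pending.append(change)

    def approve(self, change_id: int, reviewer: str) -> None:
        if not reviewer.startswith("human:"):
            raise PermissionError("only humans can approve changes")
        for change in self.pending:
            if change.id == change_id:
                change.approved = True
                return
        raise KeyError(change_id)

    def merge_approved(self) -> list[Change]:
        """Merge approved changes and keep the rest waiting for review."""
        waiting: deque[Change] = deque()
        merged_now: list[Change] = []
        for change in self.pending:
            if change.approved:
                merged_now.append(change)
            else:
                waiting.append(change)
        self.pending = waiting
        self.merged.extend(merged_now)
        return merged_now


queue = ReviewQueue()
queue.submit(Change(1, "agent:coder", "Fix flaky checkout test"))
queue.submit(Change(2, "agent:coder", "Bump HTTP client dependency"))
queue.approve(1, reviewer="human:alice")
print([c.id for c in queue.merge_approved()])  # [1]
print([c.id for c in queue.pending])           # [2]
```

Agents keep producing work overnight; the queue simply grows until a human reviews it, which is exactly where the bottleneck described below comes from.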

The bottleneck shifts from a platform limitation to a human limitation. Keeping pace with AI is both a requirement for safety and the biggest constraint on AI-driven productivity gains.

3. We need to teach AI (by codifying knowledge and what success looks like)

Your agents’ success will depend on the use case they’re deployed to handle, your preferences, and your team’s standards. But to succeed, AI needs a clear definition of what good looks like.

You and your teams need a single place, such as an internal developer portal, where your software standards, rules, working agreements, and domain language are codified in a form that AI can consume and internalize.

Most organizational knowledge currently lives in stale documentation, wikis, Slack threads, and people's heads — places AI can't reach or won’t find much value in. This needs to change, not only to give AI access but to improve your developers’ troubleshooting abilities when things go wrong. The hidden cost will be transforming institutional knowledge into systems that AI can understand.

This is where an internal developer portal can help. When everything needs to be explicit, human- and machine-readable, and constantly updated, only a real-time data model with relations and an accurate software catalog can give AI agents (and human devs) the context they need, at the speed they need it.

Portals are designed to be one-stop shops for all the context of your SDLC. When connected to your IDP through something like an MCP server, AI agents can understand the important, domain-specific needs of your organization.

Building toward an agentic future

What we’ve described here is a paradigm shift: developers and software engineering teams will need to continuously adapt to a future where agent-to-agent communication is more common and complex. Eventually, each developer may have 10 or 15 parallel threads with agents running different tasks.

Soon, we’ll see widespread adoption of agentic AI that no longer just suggests code but writes it and executes complete workflows. You, the human dev, become the manager who hands off and prioritizes work for your agentic workforce. They respond; you review.

To grow into this new future, you’ll need to stay organized, provide clear guidance to your AI agents and the human devs using them, and monitor their actions in real time.

