
If you’re a developer, your day probably looks something like this: You start by figuring out what to work on, pulling from tickets, requests, team meetings, or manager instructions. Once you’ve sorted your priorities, you start building, usually alone in the IDE, sometimes with the help of a basic AI coding assistant.

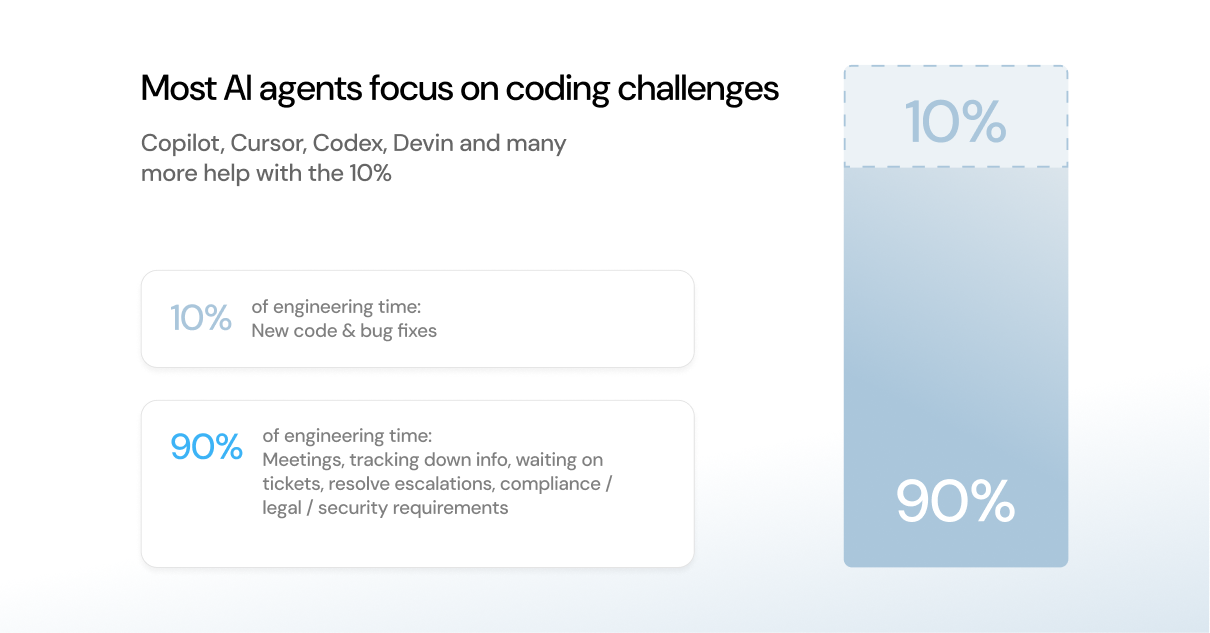

Code review happens in collaboration with your peers once code is ready for feedback, testing, and deployment. But according to Gartner, coding can take up as little as 10% of the average developer’s time.

The other 90% of your time is spent in meetings, tracking down information, troubleshooting, prioritizing or submitting tickets, and other non-coding tasks. Atlassian backs up this finding in their 2024 State of Developer Experience Report, where they found that 97% of developers lose time to inefficiencies, tech debt, and insufficient documentation. The same report states that up to 70% of developers lose a full working day per week to these issues!

So, you may be asking, why is most of the focus on using AI to write code?

What the AI-native future holds for developers

In the near future, AI will be present throughout the entire software development life cycle (SDLC). And in this future, your role as a developer shifts. Not toward coding, but toward engineering and orchestrating.

If an incident happens today, your on-call developers are responsible for investigating the root cause of an issue, writing a root-cause analysis report, and documenting a remediation plan. Your MTTR might be a day at best, or a few at worst, but all in all pretty standard.

But with AI agents, incidents become almost self-healing. Your AI agents are the ones investigating and documenting an issue’s root cause. All you have to do is review the generated RCA and choose how to direct your agent from a list of three suggested options, with their pros and cons included. Your MTTR shrinks to minutes.

Now, imagine you can simultaneously resolve three incidents, fix two bugs, and ship a new feature, all using different AI agents that run in parallel and report back to you with their progress.

Your cognitive load shifts from “how should I handle this task?” to “what can I assign to an agent?” Eventually, the question will turn from “did my agents do what I expected?” into “how do I help my agents do their jobs better?”

With an autonomous SDLC, you might start your day by asking an AI assistant what to focus on — or asking it to send you a daily digest of priorities.

Your job becomes less about writing code (which shrinks from 10% to maybe 5% of your time) and more about architecture, standards, and governing the AI that’s coding for you. You’re now less of a builder and more an architect or conductor, similar to a line manager on a factory floor. Your job becomes making sure the agents are on track, unblocking them, spotting edge cases, and managing quality.

This is a major transformation: developers go from being builders to orchestrators. But with this efficiency, you also become the slowest part of the SDLC, reviewing, approving, and course-correcting AI outputs. Work happens faster now overall, and your job demands entirely different skills.

Avoid becoming the bottleneck

As AI speeds up your SDLC, you don’t want to become the bottleneck between your team of agents and production. But you will need to make sure your agents aren’t deploying bad code.

Part of the SDLC transformation is changing developers from coders to AI managers. This includes planning your product’s architecture, defining your coding standards, and writing or maintaining the documentation your AI agents follow during development. Your software architecture skills will be used much more frequently than your hands-on coding skills.

Soon, your day starts to look more like that of a team lead:

In a recent episode of Lenny’s Podcast, Cognition Labs' CEO described their average developer working alongside five AI agents, which shifts their focus onto orchestration and planning.

But AI will soon also have a role in planning. AI shines especially when it’s given the right context.

Imagine a platform, like an internal developer portal, that integrates with your entire tech stack and shows your personal tasks, team goals, company OKRs, past escalations, open pull requests, overdue tickets, and ad-hoc requests from support or sales all at once.

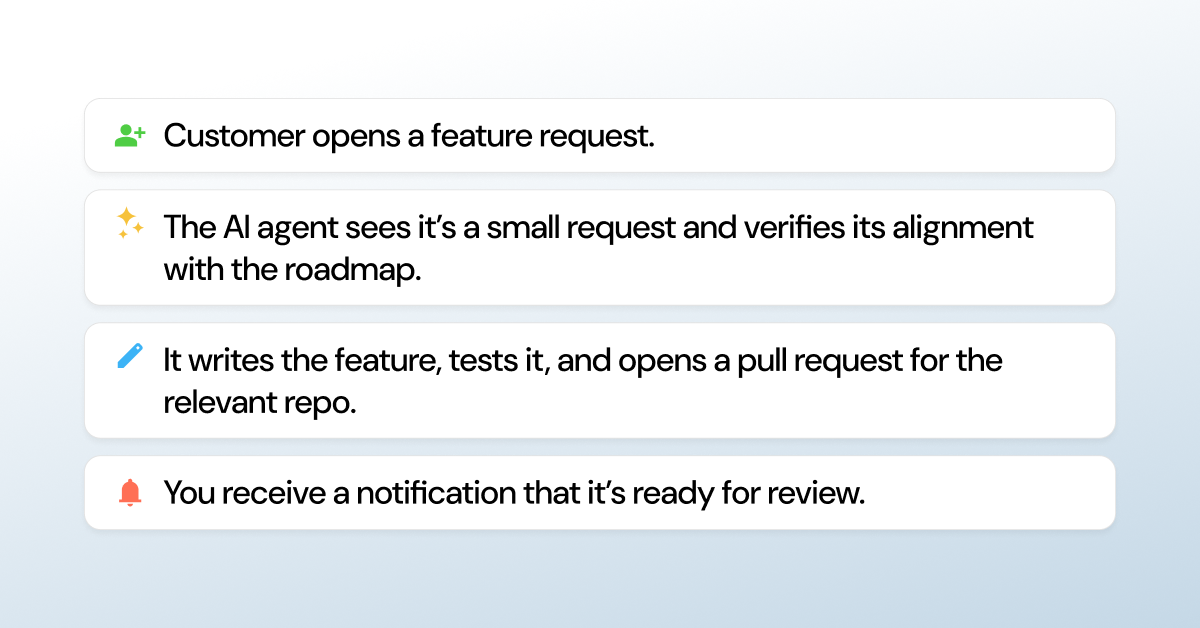

Your AI agent will likely already be focused on building your product, which means you can focus on prioritizing changes and managing code quality. Imagine this flow:

The cost of developing the feature is relatively low, so you only need to decide whether to accept it or not.

A new cognitive overload challenge

For this workflow to scale, the AI needs more than just code context. It has to understand your company’s ubiquitous language, as Eric Evans explained in his book on domain-driven design. This is often referred to as the business context.

Among other things, your business context includes your company’s unique domains, sub-domains, and terms that have special meaning, like “team,” “customer,” “service,” “revenue,” or even “a small feature request.” AI needs access to both your business context and your engineering context, which requires instructions, data access, and alignment with internal heuristics — as well as a way to keep these materials updated in real-time, to match the speed at which AI can solve problems.

Port can serve as a repository for both your business context and engineering context. But when you’re not operating with a portal, this business context can carry different meanings, not just across companies but even between departments and teams:

This context works best when it is accessible to everyone, especially when you’re orchestrating multiple AI agents. Consider a situation where you, a human developer, need to jump in and reprioritize the AI’s work (or understand how it prioritized its own work). You can use Port to determine which incidents are impacting your key strategic customers and check their status in real time.
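As a rough illustration of the kind of question this shared context lets you answer, the sketch below joins incident records to customer records and keeps only the open incidents that touch strategic customers. The `Customer` and `Incident` types, the `strategic` flag, and the sample data are all hypothetical; this is not Port's data model or API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Customer:
    name: str
    strategic: bool  # hypothetical business-context flag


@dataclass(frozen=True)
class Incident:
    id: str
    service: str
    status: str  # e.g. "open" or "resolved"
    affected_customers: Tuple[str, ...]


def strategic_open_incidents(
    incidents: List[Incident], customers: List[Customer]
) -> List[Incident]:
    """Return open incidents that affect at least one strategic customer."""
    strategic_names = {c.name for c in customers if c.strategic}
    return [
        inc
        for inc in incidents
        if inc.status == "open"
        and strategic_names.intersection(inc.affected_customers)
    ]


# Made-up sample data for illustration only.
customers = [Customer("Acme", True), Customer("Globex", False)]
incidents = [
    Incident("INC-1", "checkout", "open", ("Acme", "Globex")),
    Incident("INC-2", "search", "open", ("Globex",)),
    Incident("INC-3", "billing", "resolved", ("Acme",)),
]

urgent = strategic_open_incidents(incidents, customers)
print([inc.id for inc in urgent])  # prints ['INC-1']
```

The filter only works because "strategic customer" is defined once, in data, rather than in each team's head. That is the practical payoff of keeping business context next to engineering context.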

This is where developers and team leads shine in this new era: while AI produces code and creates new features quickly, developers remain in charge of deployment frequency, schedules, and quality thresholds. You remain in control of what code is pushed to production, when new features are released, and how the AI is trained and maintained as a tool itself.

If a feature is produced and the code isn’t correct, you can kick the feature request back to development and ask the AI to resubmit its work. If the code is correct but the feature matters less than larger or more important projects, it can be slated for a later release, when related features are ready to be deployed.


How Port plans to meet these challenges

At Port, we’re less interested in speeding up the 10% of your time you spend coding. Rather, we want to reduce your cognitive load during the other 90% of your time.

Here’s how we see it: Say a code scanning tool flags a vulnerability. One of your AI agents opens a PR to fix it, but needs to create a dev environment to test the code. It can use self-service actions to request an environment with a limited TTL and run tests independently, the same way a human developer would in the portal.

Port can help you manage and scale your AI agents, give them more controlled autonomy, and grant them deeper, governed access to your platform than MCP can provide. This means that not just the repo or IDE, but production, cloud infra, deployment systems, documentation, and everything else downstream becomes available to AI agents and can be provisioned as you see fit.

Your AI agent can then notify a SecOps engineer (or that engineer’s own AI assistant) that there is code ready for review. If it’s approved, which could very well be over the weekend when there are minimal active users, the agent might go ahead and deploy it. If the deployment fails and logs suggest an issue, it could restart the pod or page the DevOps agent.
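The flow described above can be sketched as a small decision function. Everything here is hypothetical: `Environment`, `request_environment`, `remediate`, the callback parameters, and the 30-minute TTL are illustrative stand-ins, not Port's self-service actions API. The sketch also assumes one ordering of the fallback (restart first, then page), which the scenario leaves open.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable


@dataclass
class Environment:
    name: str
    expires_at: datetime  # the environment is torn down after this time


def request_environment(name: str, ttl: timedelta) -> Environment:
    """Stand-in for a self-service action that provisions a short-lived env."""
    return Environment(name, datetime.now(timezone.utc) + ttl)


def remediate(
    run_tests: Callable[[Environment], bool],
    request_approval: Callable[[], bool],
    deploy: Callable[[], bool],
    restart_pod: Callable[[], bool],
) -> str:
    """Walk a fix from test to production and return the final outcome."""
    env = request_environment("vuln-fix-test", ttl=timedelta(minutes=30))
    if not run_tests(env):
        return "tests_failed"
    if not request_approval():  # SecOps, or a delegated assistant, signs off
        return "rejected"
    if deploy():
        return "deployed"
    # Deployment failed: try one restart, then escalate to the DevOps agent.
    return "recovered_after_restart" if restart_pod() else "paged_devops"


# Example run with stubbed callbacks: the deploy fails, the restart succeeds.
outcome = remediate(
    run_tests=lambda env: True,
    request_approval=lambda: True,
    deploy=lambda: False,
    restart_pod=lambda: True,
)
print(outcome)  # prints recovered_after_restart
```

Passing each step in as a callback keeps the governance points explicit: the agent can only act through the actions it is handed, and the approval gate sits between testing and deployment no matter who (or what) answers it.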

Synchronicities like these, combined with tool standardization, make it much easier to predict the outcomes of your agents’ work. When it comes to planning, designing, and reviewing the AI agents' work, having everything in your portal, including all the necessary context, is crucial: it keeps engineers, human or machine, in a flow state.

All of this is still not about writing code; rather, it’s about operating in the broader environment. The real win is in how AI agents interact with infrastructure, tools, governance, and people.

In the next few months, we'll be releasing some exciting new features to power this future. Subscribe to our newsletter to stay tuned for new updates.
