AI + Engineering intelligence: Measuring agentic impact and ROI

Learn more about how to adjust your measurement strategies in the AI era with Port.

DORA metrics first emerged as a means of quantifying the impact DevOps teams had on throughput and stability. At the time, developer teams comprised several humans engaged in the same or similar tasks of coding, testing, and pushing releases to production. Now, with AI agents and workflows on the cusp of mainstream adoption, it’s clear that things have changed since the original framework came out in 2013.

Port has always embraced DORA metrics as a means of improving developer experience and efficiency. But the original DORA metrics can’t paint the entire picture of AI’s impact, primarily because where and how AI is implemented matters a great deal to its success.

We knew we needed new metrics for AI success that went beyond the traditional ones. The 2025 DORA State of AI-assisted Software Development Report reinforced that view when it was released in September.

In this post, we’ll look at the DORA AI Capabilities Model and offer our own insights and recommendations for measuring AI impact, efficiency, and ROI.

What is the DORA AI Capabilities Model?

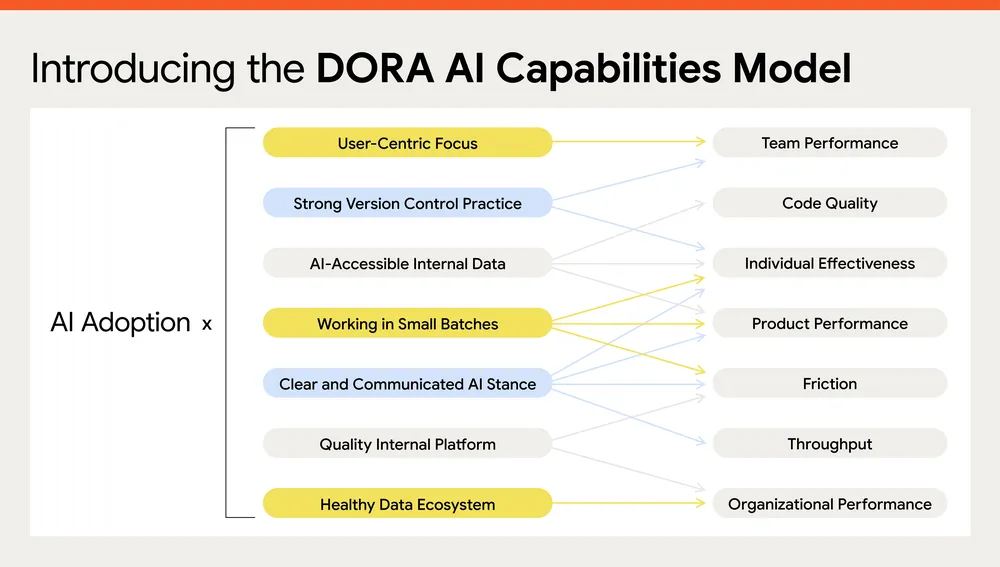

The DORA AI Capabilities Model is a set of seven factors that “help amplify the benefits of AI adoption,” per the report’s authors. These seven capabilities are:

- A user-centric focus

- Strong version control practices

- AI-accessible internal data

- Working in small batches

- A clear and communicated AI stance

- Quality internal platforms

- Healthy data ecosystems

These capabilities are more qualitative considerations than hard-and-fast metrics. But there are many ways to tie metrics to each of them, even beyond the core DORA metrics.

Strong version control practices, for example, can be enforced and quantified using scorecards, a key pillar of internal developer portals (IDPs). Working in small batches makes it significantly easier to monitor your deployment frequency and change failure rate, two key DORA metrics that measure throughput and stability, respectively.
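To illustrate how a scorecard can turn version control practices into something measurable, here is a minimal Python sketch of cumulative scorecard levels. The rules, the level names, and the `RepoSettings` fields are hypothetical assumptions chosen for illustration; this is not Port’s scorecard definition format or any Git host’s API.

```python
from dataclasses import dataclass


@dataclass
class RepoSettings:
    # Hypothetical fields; in practice these would come from your Git provider.
    name: str
    default_branch_protected: bool
    required_reviewers: int
    has_codeowners: bool
    allows_force_push: bool


# Each level's rules must pass, along with every lower level's rules.
LEVELS = [
    ("Bronze", [lambda r: r.default_branch_protected]),
    ("Silver", [lambda r: r.required_reviewers >= 1, lambda r: not r.allows_force_push]),
    ("Gold", [lambda r: r.required_reviewers >= 2, lambda r: r.has_codeowners]),
]
ORDER = ["Basic"] + [name for name, _ in LEVELS]


def scorecard_level(repo: RepoSettings) -> str:
    """Return the highest level whose rules, and all lower levels' rules, pass."""
    achieved = "Basic"
    for level, rules in LEVELS:
        if not all(rule(repo) for rule in rules):
            break
        achieved = level
    return achieved


def percent_at_or_above(repos: list[RepoSettings], level: str) -> float:
    """Share of repositories (in percent) that reach at least the given level."""
    threshold = ORDER.index(level)
    hits = sum(ORDER.index(scorecard_level(r)) >= threshold for r in repos)
    return 100.0 * hits / len(repos)
```

Tracking `percent_at_or_above(repos, "Silver")` over time gives a single number for how consistently teams follow the version control practices the scorecard encodes.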

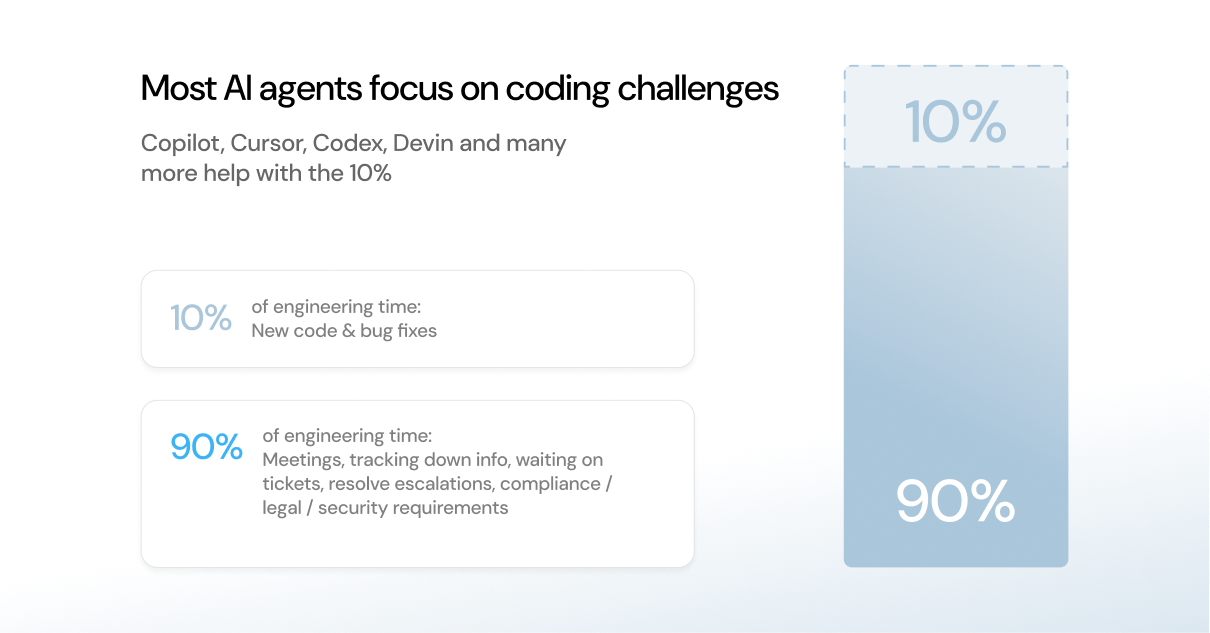

The other thing you may notice is that almost none of these capabilities involve much coding. Lines of code (LoC) used to be the ultimate signifier of productivity, but how much does that matter when developers spend only 10 percent of their time writing code?

If AI tools are left only to write code, without access to your systems, engineering context, or domain knowledge, their benefits will remain confined to coding problems. At Port, we embrace the idea that AI needs to be deeply integrated into your software development lifecycle (SDLC), not just a coding and chat assistant.

The main takeaway we had from this year's DORA report is that, without the right context, permissions, guardrails, guidelines, and human involvement throughout the process, AI can’t reach its true transformative potential. Five of the seven capabilities point to a need for strong systems, engineering platforms, and domain-integrated context provided in an AI-accessible way.

How Port delivers agentic engineering at scale

Port is specifically designed to help you fully embed and integrate AI agents and agentic workflows into your software development process. As first-class users of the platform, agents benefit from Port in the following ways:

Two essential categories for measuring AI adoption

As this year’s DORA report indicates, engineering metrics need to evolve alongside AI agents and the infrastructure that emerges to support them. This is the primary reason we launched our Agentic Engineering Platform (AEP) at Port.

After working with dozens of strategic customers to develop the AEP, we maintain that DORA metrics offer a clear framework for measuring throughput and stability. But in addition to those metrics, we recommend adding two more to effectively measure AI’s impact:

- Execution time (how long it takes to complete a single task)

- Time-to-market (how fast value reaches production, in terms of features released)

Both are primarily throughput measures. Your stability metrics, by contrast, should hold steady when you introduce AI into your processes.

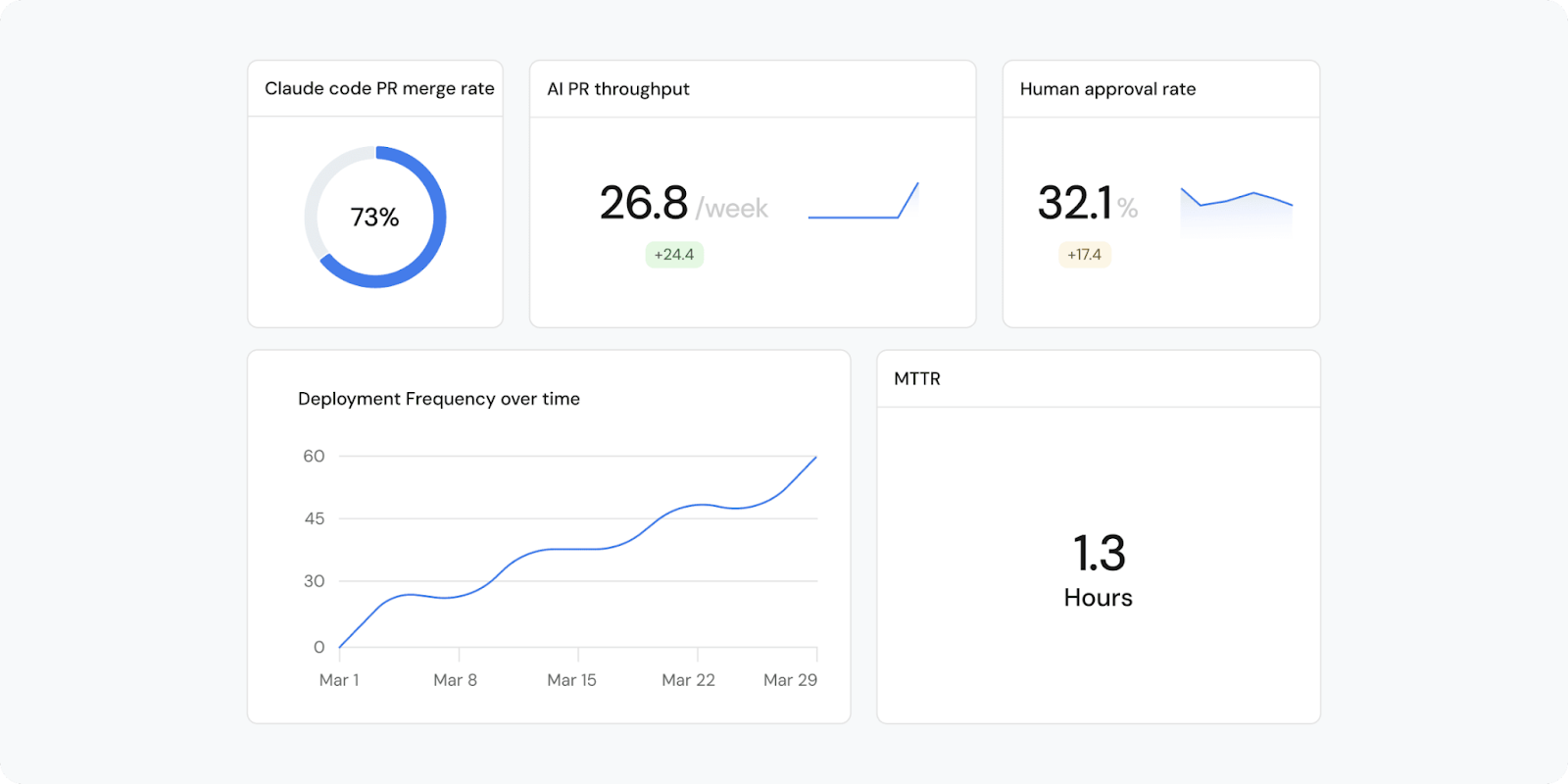

Effective AI implementations and agentic engineering should reduce both execution time and time-to-market. When adopted successfully, your teams’ use of AI gets features to production, and to the wider market, faster, giving you a significant advantage over competitors.

AI should also let you deliver more features in the same amount of time. Its impact as a force multiplier isn’t restricted to speed; it should also raise throughput, which means deployment frequency should increase.

At the same time, AI should not decrease the stability of your product. As an effective AI adoption process moves more code into production, some added instability may be reasonable or statistically insignificant. But prolonged, consistent deterioration in stability likely indicates that the code AI produces, or the actions it takes during autonomous work, needs review and iteration.
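One way to separate acceptable noise from prolonged deterioration is sketched below in Python: it groups deployments by ISO week, computes deployment frequency and change failure rate (CFR) per week, and flags only a sustained run of weeks above a pre-AI baseline. The `Deployment` record, the 5-point tolerance, and the three-week window are illustrative assumptions, not recommended thresholds.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Deployment:
    day: date
    caused_failure: bool  # e.g., led to an incident, rollback, or hotfix


def weekly_metrics(deployments: list[Deployment]) -> dict[tuple[int, int], tuple[int, float]]:
    """Map (ISO year, ISO week) to (deployment count, change failure rate)."""
    buckets: dict[tuple[int, int], list[bool]] = defaultdict(list)
    for d in deployments:
        iso = d.day.isocalendar()
        buckets[(iso[0], iso[1])].append(d.caused_failure)
    return {
        week: (len(flags), sum(flags) / len(flags))
        for week, flags in sorted(buckets.items())
    }


def sustained_deterioration(weekly_cfr: list[float], baseline_cfr: float,
                            tolerance: float = 0.05, weeks: int = 3) -> bool:
    """True only if CFR stays above baseline + tolerance for `weeks` weeks in a row."""
    streak = 0
    for cfr in weekly_cfr:
        streak = streak + 1 if cfr > baseline_cfr + tolerance else 0
        if streak >= weeks:
            return True
    return False
```

Rising weekly counts from `weekly_metrics` reflect the throughput gain; feeding its weekly CFR values into `sustained_deterioration`, with a baseline taken from the pre-AI period, signals when agent output needs review rather than reacting to a single bad week.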

To understand the impact of AI agents on your overall efficiency, we recommend comparing execution time and time-to-market durations pre- and post-AI implementation.
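A minimal Python sketch of that pre/post comparison follows. It assumes each work item records when work started, when the task itself was completed (end of execution time), and when the resulting change reached production (end of time-to-market); those timestamps and the `WorkItem` name are assumptions about what your tooling captures. Medians are used so that a few outlier tasks don’t dominate the comparison.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class WorkItem:
    started: datetime    # work on the task began
    completed: datetime  # task finished: end of execution time
    released: datetime   # the change reached production: end of time-to-market


def _hours(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600


def summarize(items: list[WorkItem]) -> dict[str, float]:
    """Median execution time and time-to-market, in hours."""
    return {
        "execution_h": median(_hours(i.started, i.completed) for i in items),
        "time_to_market_h": median(_hours(i.started, i.released) for i in items),
    }


def percent_change(before: list[WorkItem], after: list[WorkItem]) -> dict[str, float]:
    """Negative values mean the post-AI period is faster."""
    b, a = summarize(before), summarize(after)
    return {k: 100.0 * (a[k] - b[k]) / b[k] for k in b}
```

Reporting the two percentages separately keeps a faster coding step from masking wait states that still delay the release.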

An AI ROI framework for engineering teams

Beyond using the DORA framework to gauge throughput and stability, calculating the return on your investment in AI adoption is essential to understanding the full impact of your implementation.

Building on our earlier work measuring the ROI of GenAI, we hold that agentic AI must have a measurable impact on your bottom line. To understand this impact, we recommend using metrics across three essential categories:

1. Usage and adoption

- AI agent throughput: How many tasks are assigned to AI agents per week, relative to all engineering tasks (e.g., three AI agents each write three net-new docs per day)

- AI agent utilization rate across teams: How many AI agents are used per team (e.g., the frontend team uses three agents per dev, but security uses two agents per dev)

- Token costs mapped to business outcomes: How many tokens agents consumed to complete a given outcome (e.g., PR reviews in a single week)
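The first two usage metrics can be sketched in Python from a flat list of task records. The `Task` fields (week label, team, assignee, and whether the assignee is an agent) are hypothetical assumptions about what your task tracker exports. Utilization is expressed as distinct active agents per distinct active developer, matching the "agents per dev" framing above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Task:
    week: str       # hypothetical week label, e.g. "2025-W36"
    team: str
    assignee: str   # agent or developer identifier
    is_agent: bool


def agent_task_share(tasks: list[Task], week: str) -> float:
    """Fraction of a week's engineering tasks that were assigned to AI agents."""
    in_week = [t for t in tasks if t.week == week]
    return sum(t.is_agent for t in in_week) / len(in_week) if in_week else 0.0


def agents_per_dev(tasks: list[Task]) -> dict[str, float]:
    """Distinct active agents divided by distinct active developers, per team."""
    agents: dict[str, set[str]] = defaultdict(set)
    devs: dict[str, set[str]] = defaultdict(set)
    for t in tasks:
        (agents if t.is_agent else devs)[t.team].add(t.assignee)
    # Teams with agents but no active developers are skipped to avoid dividing by zero.
    return {team: len(agents[team]) / len(members) for team, members in devs.items()}
```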

2. Time impact

- Execution savings: How quickly direct tasks are completed (e.g., net-new documentation creation drops from hours to minutes)

- Flow efficiency: How the duration of wait states changes (e.g., PR review times drop from days to hours with AI-assisted reviews)

- Time savings: Hours saved × frequency × developer cost (e.g., if unit testing took three devs two hours per week, AI saves both the development time and cost)
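The time-impact formulas can be written out directly. The sketch below implements flow efficiency as active time over total lead time, the time-savings formula above, and, as an added assumption, the conventional ROI ratio of net benefit to AI spend. None of the function names come from Port.

```python
def flow_efficiency(active_hours: float, lead_time_hours: float) -> float:
    """Share of total lead time spent on active work rather than waiting."""
    return active_hours / lead_time_hours


def weekly_time_savings(hours_saved_per_run: float, runs_per_week: int,
                        hourly_cost: float) -> tuple[float, float]:
    """Hours saved x frequency x developer cost; returns (hours, cost) per week."""
    hours = hours_saved_per_run * runs_per_week
    return hours, hours * hourly_cost


def roi(value_saved: float, ai_cost: float) -> float:
    """Conventional ROI: net benefit divided by what was spent on AI."""
    return (value_saved - ai_cost) / ai_cost
```

For the unit-testing example, assume each of the three developers spent two hours a week and a hypothetical loaded cost of $100 per hour. Then `weekly_time_savings(2, 3, 100)` returns `(6, 600)`, and against a hypothetical $150 of weekly AI spend, `roi(600, 150)` is `3.0`, or 300 percent.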

3. Quality and trust

- Human intervention rate per AI workflow (e.g., how many times humans had to revert agent-produced code or reorient agents in workflows)

- Developer satisfaction surveys on AI assistance (e.g., has AI caused more frustration than it has helped?)

- Success rate of AI-completed tasks (e.g., how often AI-produced code makes it into production)
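The two quantitative trust metrics can be computed per workflow from a simple run log. In this Python sketch, the `AgentRun` record is a hypothetical assumption: it counts human interventions (reverts or course corrections) per run and records whether the agent’s output reached production.

```python
from dataclasses import dataclass


@dataclass
class AgentRun:
    workflow: str
    interventions: int     # reverts or human course corrections during the run
    merged_to_prod: bool   # did the agent's output make it into production?


def quality_metrics(runs: list[AgentRun]) -> dict[str, dict[str, float]]:
    """Interventions per run and success rate, grouped by workflow."""
    by_workflow: dict[str, list[AgentRun]] = {}
    for r in runs:
        by_workflow.setdefault(r.workflow, []).append(r)
    return {
        wf: {
            "interventions_per_run": sum(r.interventions for r in rs) / len(rs),
            "success_rate": sum(r.merged_to_prod for r in rs) / len(rs),
        }
        for wf, rs in by_workflow.items()
    }
```

Grouping by workflow matters because a high intervention rate in one workflow (say, PR review) shouldn’t be averaged away by a reliable one elsewhere.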

How to measure the ROI of agentic workflows in Port

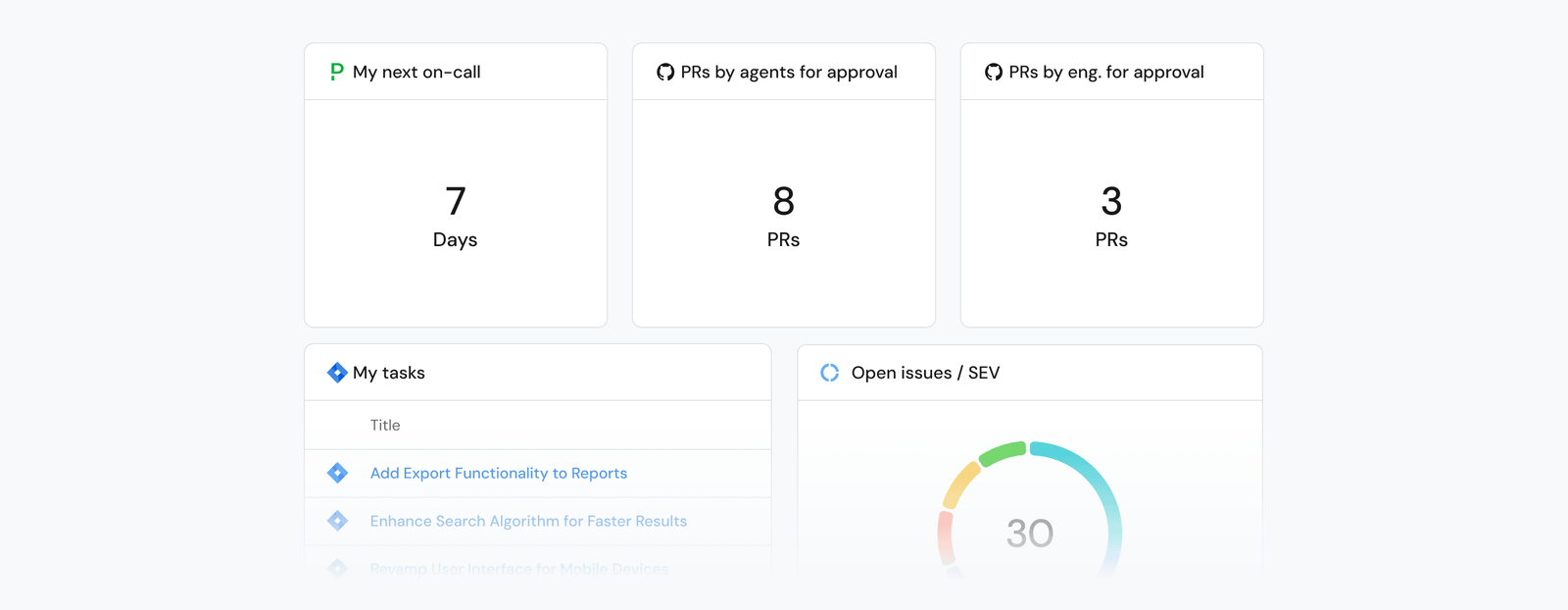

Alongside accelerating overall delivery, Port also tracks and stores data for all of the metric categories above. The Agentic Work Management solution is built to surface your full set of usage and adoption metrics.

To act on these metrics in Port, start by instrumenting your AI workflows with proper tracking. This pulls all relevant data into your Engineering Intelligence dashboard.
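As a generic illustration of what instrumenting a workflow involves, here is a minimal Python context manager that records duration, token usage, business outcome, and success or failure for each agent run. It is not Port’s SDK or ingestion API. The in-memory `EVENTS` list is a hypothetical stand-in for whatever sink actually ships the data to your dashboard, and the token count would come from your model provider’s usage reporting.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass


@dataclass
class TraceEvent:
    agent: str
    outcome: str          # business context, e.g. "security review"
    duration_s: float = 0.0
    tokens: int = 0
    status: str = "ok"


EVENTS: list[TraceEvent] = []  # hypothetical stand-in for a real event sink


@contextmanager
def traced(agent: str, outcome: str):
    """Record one agent run; the wrapped code adds token counts to the event."""
    event = TraceEvent(agent=agent, outcome=outcome)
    start = time.perf_counter()
    try:
        yield event
    except Exception:
        event.status = "error"
        raise
    finally:
        event.duration_s = time.perf_counter() - start
        EVENTS.append(event)
```

Usage, with a hypothetical token count:

```python
with traced("docs-agent", "feature documentation") as ev:
    ev.tokens += 1200  # e.g., taken from the model response's usage data
```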

Through Port's audit logs, you can track data that answers questions like, “How many tokens did our AI agent consume to complete PR review?” and even query our native MCP server for answers.

These trace logs also capture agent activity, token usage, and business context, allowing you to connect AI costs directly to outcomes like “feature documentation” or “security review.”
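Once trace records carry a business outcome, mapping token usage to cost per outcome is a simple aggregation. This Python sketch assumes rows of `(agent, outcome, tokens)` and a single blended price per million tokens; both the row layout and the price are hypothetical placeholders, since real pricing usually differs for input and output tokens and by model.

```python
from collections import defaultdict


def cost_by_outcome(rows: list[tuple[str, str, int]],
                    usd_per_million_tokens: float) -> dict[str, dict[str, float]]:
    """Total tokens, total cost, and cost per run for each business outcome."""
    tokens: dict[str, int] = defaultdict(int)
    runs: dict[str, int] = defaultdict(int)
    for _agent, outcome, n in rows:
        tokens[outcome] += n
        runs[outcome] += 1
    result = {}
    for outcome, total in tokens.items():
        cost = total * usd_per_million_tokens / 1_000_000
        result[outcome] = {"tokens": total, "cost_usd": cost,
                           "cost_per_run_usd": cost / runs[outcome]}
    return result
```

Cost per run for an outcome such as "security review" can then be set against the time savings for the same outcome.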

This visibility enables you to benchmark agent adoption and scope improvements. For example, if documentation previously took a few days to create from end to end, you can now measure both the execution speedup and the overall delivery acceleration as separate metrics, which helps you understand the value AI provides.

Once you understand where you have bottlenecks, you can then consider creating governed agentic workflows to reduce those bottlenecks.

How to start measuring your agentic impact

To decide where to start building, weigh impact against effort and risk, and highlight high-ROI areas to invest in first. It’s important to look for high-value, low-risk initiatives that you can iterate on a few times before launching to your wider team. Starting small helps you gain confidence when building new functionality, build trust with your teams and stakeholders, and measure changes in controlled environments.
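One simple way to combine impact, effort, and risk into a shortlist is sketched in Python below. The 1 to 5 scales, the `impact / (effort * risk)` score, and the risk cap are all illustrative assumptions rather than a prescribed formula. Filtering out high-risk candidates first keeps the focus on low-risk initiatives you can safely iterate on.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    impact: int  # 1-5: expected value if the workflow works
    effort: int  # 1-5: cost to build and roll out
    risk: int    # 1-5: blast radius if the agent gets it wrong


def priority(c: Candidate) -> float:
    """One possible weighting: reward impact, penalize effort and risk."""
    return c.impact / (c.effort * c.risk)


def rank(candidates: list[Candidate], max_risk: int = 2) -> list[Candidate]:
    """Keep only low-risk candidates, highest priority first."""
    safe = [c for c in candidates if c.risk <= max_risk]
    return sorted(safe, key=priority, reverse=True)
```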

Developers are likely already experimenting with AI, so ask your teams where they’ve found it most useful. Rolling out those same use cases across teams is another great way to build confidence and trust in AI across the board.

Port can further help you determine where those high-ROI spots are via surveys, and provides the platform where you can build and execute governed agentic workflows against them.
