Demystifying Kubernetes: CRDs and controllers for developers

Debunk common Kubernetes myths and see how the platform is not only a container orchestrator but also a powerful, API-driven server. Then take a look at how Port enables seamless communication between custom resources and controllers within K8s.

This article is inspired by the talk Demystifying Why the World is Built on Kubernetes, originally given by Sébastien and Abby at KubeCon London in April 2025. You can watch the original talk here.

Product teams often struggle to extend the Kubernetes (K8s) API server with custom resources and controllers. Four common misconceptions about the K8s API exacerbate this issue, slowing custom resource management and hindering the developer experience (DevEx).

This article debunks these myths and unveils how K8s acts as both a container orchestrator and an API server. We then highlight how Port’s internal developer portal (IDP) guides platform engineers who control workloads, administrators who oversee infrastructure, and developers who extend the K8s API.

Four myths about extending Kubernetes with controllers

Myth 1: Controllers are only for native Kubernetes resources

Many engineers believe that controllers can only act on K8s resources like pods. In reality, they also connect with external APIs and bare metal (physical) servers. This flexibility boosts inter-system communications, accelerates workflows, eliminates manual processes, and elevates team productivity.
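A minimal Python sketch of the idea: a hypothetical controller that turns Kubernetes watch events for Widget resources (the example custom resource defined later in this article) into calls to an external database service. The external service is modeled by an in-memory `FakeExternalDB` class, which is an assumption for illustration; a real controller would receive events from the API server's watch stream and call the service's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class FakeExternalDB:
    """Hypothetical stand-in for an external (non-Kubernetes) database service API."""
    databases: dict = field(default_factory=dict)  # database name -> environment

    def upsert(self, name: str, env: str) -> None:
        self.databases[name] = env

    def drop(self, name: str) -> None:
        self.databases.pop(name, None)


def handle_event(event: dict, external: FakeExternalDB) -> None:
    """Translate one Kubernetes watch event about a Widget into an external API call."""
    spec = event["object"].get("spec", {})
    # Fall back to the defaults declared in the example Widget CRD.
    name, env = spec.get("dbName", "postgres"), spec.get("env", "dev")
    if event["type"] in ("ADDED", "MODIFIED"):
        external.upsert(name, env)  # idempotent: repeating it is harmless
    elif event["type"] == "DELETED":
        external.drop(name)
    # Other event types (e.g., BOOKMARK, ERROR) carry no Widget change; ignore them.


external = FakeExternalDB()
events = [
    {"type": "ADDED", "object": {"spec": {"dbName": "orders", "env": "dev"}}},
    {"type": "MODIFIED", "object": {"spec": {"dbName": "orders", "env": "prod"}}},
    {"type": "ADDED", "object": {"spec": {"dbName": "billing"}}},
    {"type": "DELETED", "object": {"spec": {"dbName": "billing"}}},
]
for e in events:
    handle_event(e, external)
print(external.databases)  # {'orders': 'prod'}
```

Reacting to events one at a time keeps the example short, but it is fragile: a missed event, or a renamed dbName, leaves the external system out of sync. That is why production controllers usually reconcile the full desired state rather than individual events.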

Myth 2: You can only write Kubernetes controllers in Go

False! You can write custom controllers, which usually run as pods in your cluster, in many popular programming languages, including Python, Java, Node.js, and even shell scripts. Controllers interact with the K8s API server, which tracks your cluster resources, through a REST API over HTTPS, so any language that can make HTTP requests will work. You are not limited to Go.
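Because the API server speaks plain HTTP(S) and JSON, the language is mostly a matter of preference. The Python sketch below, using only the standard library, shows the URL conventions a controller relies on: core resources such as pods live under `/api`, resources in named API groups (such as the `example.com` group used later in this article) live under `/apis`, and the `watch=true` query parameter turns a list request into a stream of change events. The helper names are hypothetical, and the sketch only builds URLs; authentication, TLS, and the HTTP calls themselves are left out.

```python
from typing import Optional
from urllib.parse import urlencode


def resource_path(group: str, version: str, plural: str,
                  namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build the REST path the Kubernetes API server exposes for a resource type."""
    # Core resources (pods, services, ...) use /api; all other API groups use /apis.
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    if namespace is not None:  # namespaced resources nest under their namespace
        base += f"/namespaces/{namespace}"
    path = f"{base}/{plural}"
    if name is not None:  # a single object rather than the whole collection
        path += f"/{name}"
    return path


def watch_url(server: str, path: str, resource_version: Optional[str] = None) -> str:
    """Add the query parameters that turn a list request into a watch stream."""
    params = {"watch": "true"}
    if resource_version is not None:  # resume from a known point in history
        params["resourceVersion"] = resource_version
    return f"{server}{path}?{urlencode(params)}"


print(resource_path("example.com", "v1", "widgets", namespace="team-a"))
# /apis/example.com/v1/namespaces/team-a/widgets
print(resource_path("", "v1", "pods", namespace="default", name="web-0"))
# /api/v1/namespaces/default/pods/web-0
print(watch_url("https://kubernetes.default.svc", resource_path("example.com", "v1", "widgets")))
# https://kubernetes.default.svc/apis/example.com/v1/widgets?watch=true
```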

The real issue emerges when you write K8s controllers in different languages without clear standards. You can enforce standards manually, but many teams choose to employ an IDP solution to build consistency across teams.

For instance, you can embrace Port’s IDP solution to:

- Record in your software catalog the language (e.g., Python, Java, and more) that each controller is written in.

- Adopt scorecards that check controllers against your standards, keeping controller code consistent and high quality across languages.

These features align engineers across disciplines and language expertise, even when developers work with unfamiliar languages.

Myth 3: You need to know everything before starting

The truth is that developers can learn as they go and build K8s controllers incrementally, so they don't need to become experts from the outset. We recommend starting small by writing a simple controller that does one thing well. This approach gives developers a clear path toward building more sophisticated controllers against the K8s API server.
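As an illustration of a controller that does one thing well, here is a minimal Python sketch of a hypothetical controller whose only job is to make sure every Widget carries an `app.kubernetes.io/managed-by` label. Its reconcile step is idempotent: it returns a JSON merge patch when the label is missing and `None` once the object is already in the desired state. The label value `widget-controller` is an assumption, and sending the patch to the API server (a PATCH request with the `application/merge-patch+json` content type) is left out.

```python
import json
from typing import Optional

# Hypothetical policy for this sketch: every Widget should carry this label.
LABEL_KEY = "app.kubernetes.io/managed-by"
LABEL_VALUE = "widget-controller"


def reconcile_label(obj: dict) -> Optional[str]:
    """Return a JSON merge patch that adds the label, or None if there is nothing to do."""
    labels = obj.get("metadata", {}).get("labels") or {}
    if labels.get(LABEL_KEY) == LABEL_VALUE:
        return None  # already in the desired state
    # A merge patch only names the key to set; existing labels are left untouched.
    patch = {"metadata": {"labels": {LABEL_KEY: LABEL_VALUE}}}
    return json.dumps(patch)


widget = {"metadata": {"name": "orders-db", "labels": {"team": "payments"}}}
print(reconcile_label(widget))
# {"metadata": {"labels": {"app.kubernetes.io/managed-by": "widget-controller"}}}
```

Once this works, you can add a second responsibility, or a second small controller, with confidence that the first one still behaves.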

Myth 4: Building on Kubernetes is only for vendors

You don't need to be a cloud provider, such as Microsoft Azure or AWS, to build on your K8s instance. In reality, you can use Custom Resource Definitions (CRDs) and custom controllers to extend your IDP and accelerate self-service operations.

The portal also makes K8s accessible to multiple teams across your organization, including developers, DevOps, and platform engineers, while controlling access and resource allocation.

What developers need to know about the Kubernetes API server

By demystifying these misconceptions, we showcase the true capabilities of K8s, revealing it as more than a container orchestrator.

Developers can employ K8s’s extensible API to automate workflows and control clusters. Here’s how the K8s API boosts the efficiency of multiple interaction models:

- DevOps and platform engineers run and monitor applications on K8s. They use the kubectl command-line interface (CLI) to check logs, track pods, and confirm that apps are running as expected. kubectl works by sending requests to the K8s API server, which engineers can also call directly.

- Administrators shape infrastructure by setting up clusters, configuring nodes, and handling role-based access control (RBAC). Their goal is to stabilize environments and confirm that they perform effectively.

- Developers extend the functionality of K8s by building CRDs and writing custom controllers or operators that act on API events. Developers have the flexibility to customize K8s to meet specific infrastructure requirements and operational objectives.
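Administrators typically express access control as RBAC roles made of rules that list API groups, resources, and verbs. The Python sketch below models a simplified version of how such rules are evaluated: permissions are additive, so a request is allowed if any rule matches it, and `*` acts as a wildcard. It deliberately ignores details such as resourceNames, subresources, and role bindings, and the `developer` role is a hypothetical example that grants access to the Widget resource defined later in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    """Simplified mirror of an RBAC rule (ignores resourceNames and subresources)."""
    api_groups: tuple[str, ...]
    resources: tuple[str, ...]
    verbs: tuple[str, ...]


def _matches(allowed: tuple[str, ...], value: str) -> bool:
    return "*" in allowed or value in allowed


def is_allowed(rules: list[PolicyRule], api_group: str, resource: str, verb: str) -> bool:
    """RBAC is additive: a request is allowed if any rule grants it; there are no deny rules."""
    return any(
        _matches(r.api_groups, api_group)
        and _matches(r.resources, resource)
        and _matches(r.verbs, verb)
        for r in rules
    )


# Hypothetical developer role: manage Widgets, but only read Pods ("" is the core API group).
developer = [
    PolicyRule(("example.com",), ("widgets",), ("get", "list", "watch", "create", "update", "delete")),
    PolicyRule(("",), ("pods",), ("get", "list", "watch")),
]
print(is_allowed(developer, "example.com", "widgets", "create"))  # True
print(is_allowed(developer, "", "pods", "delete"))                # False
```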

What are Kubernetes custom resource definitions?

CRDs extend the K8s API using custom data models, so you can define unique resources alongside deployments, pods, and services. Think of CRDs as blueprints that describe the structure of your custom resources.

Here are the core components of the K8s CRD mechanism:

- Resource manifests declare the desired state, or spec, of a resource, and the API server validates them against the resource type's OpenAPI schema. For instance, you can write a manifest that requests “three replicas of this app” and apply it to your K8s cluster to boost resiliency.

- Native resources include deployments, pods, ingress, services, and CronJobs. These components are built-in and available out of the box in every K8s instance.

- Custom definitions introduce new resource types, or “kinds,” which you can then track and incorporate into K8s. These kinds are the new objects that you oversee and work with.

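During CRD creation, you populate identifying fields such as the resource's name, API group, and version, and you define the spec fields, such as the environment, that each custom resource will carry. We recommend settling these identifiers at the beginning of your project, because they appear in every API path for the resource and make your custom resources easy to oversee.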
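Some of these identifiers must agree with each other, and the API server rejects a CRD when they don't. The Python sketch below checks two such rules against a dictionary form of the widgets.example.com CRD shown below: `metadata.name` must be `<plural>.<group>`, and exactly one version must be marked as the storage version. The `check_crd` helper is hypothetical; in practice, the API server performs this validation when you apply the CRD.

```python
def check_crd(crd: dict) -> list[str]:
    """Return problems with a CRD's identifying fields (an empty list means none found)."""
    problems = []
    spec = crd.get("spec", {})
    group = spec.get("group", "")
    plural = spec.get("names", {}).get("plural", "")
    expected = f"{plural}.{group}"
    # The API server requires metadata.name to be "<plural>.<group>".
    if crd.get("metadata", {}).get("name") != expected:
        problems.append(f"metadata.name should be {expected!r}")
    # Exactly one version must be the storage version.
    storage = [v.get("name") for v in spec.get("versions", []) if v.get("storage")]
    if len(storage) != 1:
        problems.append(f"expected exactly one storage version, found {storage}")
    return problems


widget_crd = {
    "metadata": {"name": "widgets.example.com"},
    "spec": {
        "group": "example.com",
        "names": {"plural": "widgets", "singular": "widget", "kind": "Widget"},
        "versions": [{"name": "v1", "served": True, "storage": True}],
    },
}
print(check_crd(widget_crd))  # []
```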

Below is an example CRD that defines a Widget resource, including its schema:

```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: widgets.example.com
spec:
  group: example.com
  versions:
    - name: v1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                dbName:
                  type: string
                  default: postgres
                  description: A database will be created with this name.
                env:
                  type: string
                  default: dev
                  description: Prod gets backups and more resources.
                  enum:
                    - dev
                    - prod
  scope: Namespaced
  names:
    plural: widgets
    singular: widget
    kind: Widget
```

Example: If you want to track PostgreSQL databases, build a CRD that includes fields such as name, version, and environment (dev or prod).

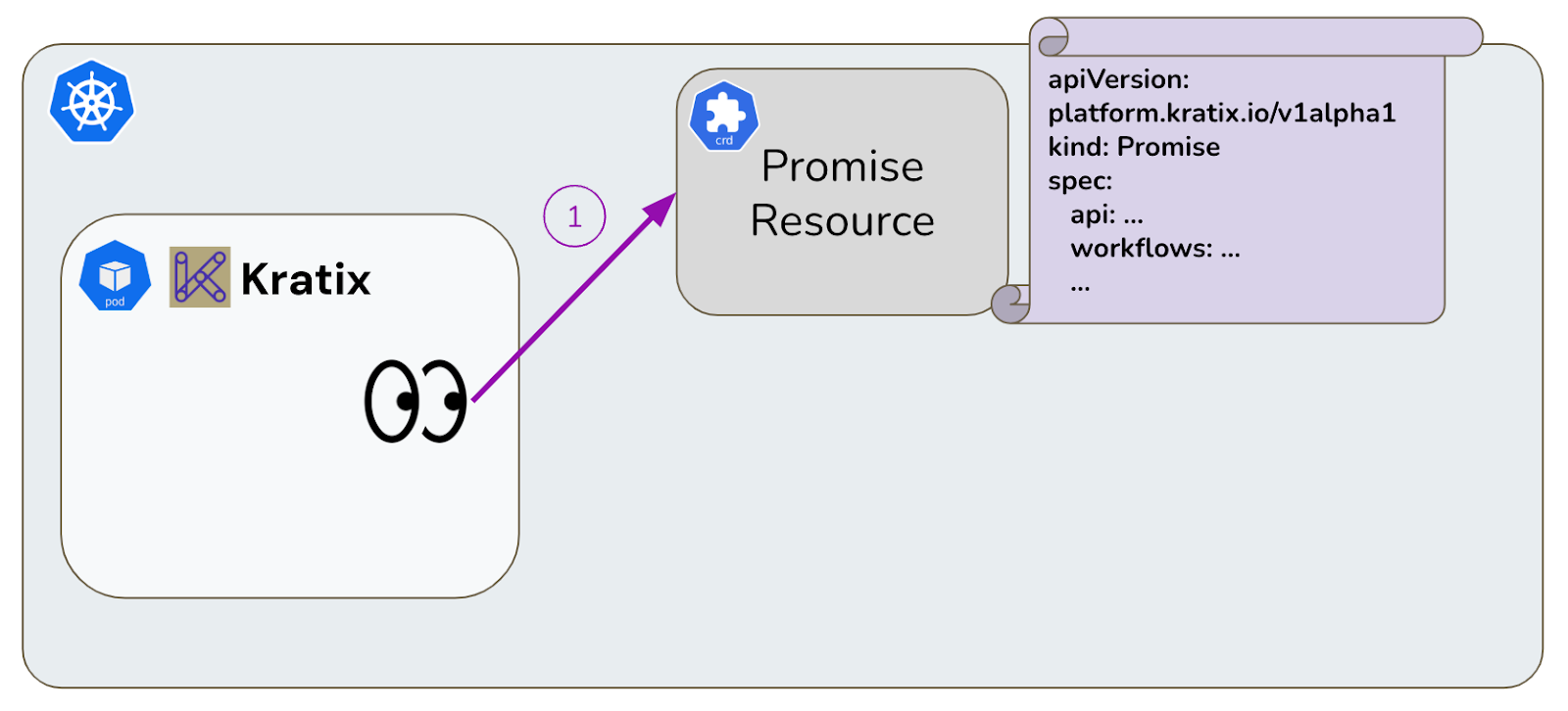
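To see what the schema buys you, here is a minimal Python sketch that mimics two things the API server does with a Widget object it receives: it fills in the `default` values from the CRD's schema and rejects values outside the `enum`. The real API server performs full OpenAPI v3 validation and more; this hypothetical `admit_widget` helper handles only the fields in the example CRD above.

```python
import copy

# The spec properties from the example Widget CRD's openAPIV3Schema, as a Python dict.
WIDGET_SPEC_SCHEMA = {
    "dbName": {"type": "string", "default": "postgres"},
    "env": {"type": "string", "default": "dev", "enum": ["dev", "prod"]},
}


def admit_widget(widget: dict) -> dict:
    """Roughly mimic schema handling for a Widget: apply defaults, then check types and enums."""
    result = copy.deepcopy(widget)  # leave the caller's object untouched
    spec = result.setdefault("spec", {})
    for name, rules in WIDGET_SPEC_SCHEMA.items():
        if name not in spec and "default" in rules:
            spec[name] = rules["default"]  # defaulting fills in omitted fields
        value = spec.get(name)
        if rules.get("type") == "string" and value is not None and not isinstance(value, str):
            raise ValueError(f"spec.{name} must be a string, got {value!r}")
        if "enum" in rules and value not in rules["enum"]:
            raise ValueError(f"spec.{name}: {value!r} is not one of {rules['enum']}")
    return result


w = {
    "apiVersion": "example.com/v1",
    "kind": "Widget",
    "metadata": {"name": "orders-db", "namespace": "team-a"},
    "spec": {"env": "prod"},
}
print(admit_widget(w)["spec"])  # {'env': 'prod', 'dbName': 'postgres'}
```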

At this stage, developers can easily build and govern these custom resources on K8s using an open-source platform engineering framework like Kratix. This platform automates resource provisioning through reusable APIs.

How Kubernetes controllers work

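After successfully defining CRDs, you can build custom K8s controllers. A custom controller watches the API server for changes to the resources it manages and runs a reconciliation loop: it compares the current state of the system with the desired state declared in each resource's spec, then acts to close any gap. Because the loop keeps running, the controller also corrects drift caused by failures or manual changes.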
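The loop can be sketched in a few lines of Python. This minimal, hypothetical example derives the desired state from a Widget's spec (a database, plus a backup job for prod, following the env description in the example CRD), diffs it against the observed state, and repeats until a pass finds nothing to do. Child names such as database/orders are assumptions, and the sets stand in for real cluster or external state.

```python
def desired_children(widget: dict) -> set:
    """Derive the desired state from a Widget's spec (hypothetical child naming)."""
    spec = widget["spec"]
    children = {f"database/{spec['dbName']}"}
    if spec["env"] == "prod":  # the CRD's description says prod gets backups
        children.add(f"backup-cronjob/{spec['dbName']}")
    return children


def reconcile(widget: dict, actual: set) -> list:
    """One pass of the loop: diff actual against desired and return corrective actions."""
    desired = desired_children(widget)
    actions = [("create", child) for child in sorted(desired - actual)]
    actions += [("delete", child) for child in sorted(actual - desired)]
    return actions


def run_until_converged(widget: dict, actual: set, max_passes: int = 5) -> set:
    """Apply actions and re-observe until a pass finds nothing to do."""
    for _ in range(max_passes):
        actions = reconcile(widget, actual)
        if not actions:  # actual state matches desired state
            break
        for verb, child in actions:
            if verb == "create":
                actual.add(child)
            else:
                actual.discard(child)
    return actual


widget = {"spec": {"dbName": "orders", "env": "prod"}}
actual = {"database/orders", "database/stale"}
print(reconcile(widget, actual))
# [('create', 'backup-cronjob/orders'), ('delete', 'database/stale')]
print(sorted(run_until_converged(widget, actual)))
# ['backup-cronjob/orders', 'database/orders']
```

Because each pass re-reads the full state instead of relying on the last event, the loop is level-triggered: it converges even if events are missed or someone deletes a child resource by hand. In a real controller, applying an action means calling the API server or an external system, and passes are triggered by watch events and periodic resyncs rather than a fixed count.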


Accelerate workflows with Port’s API catalog

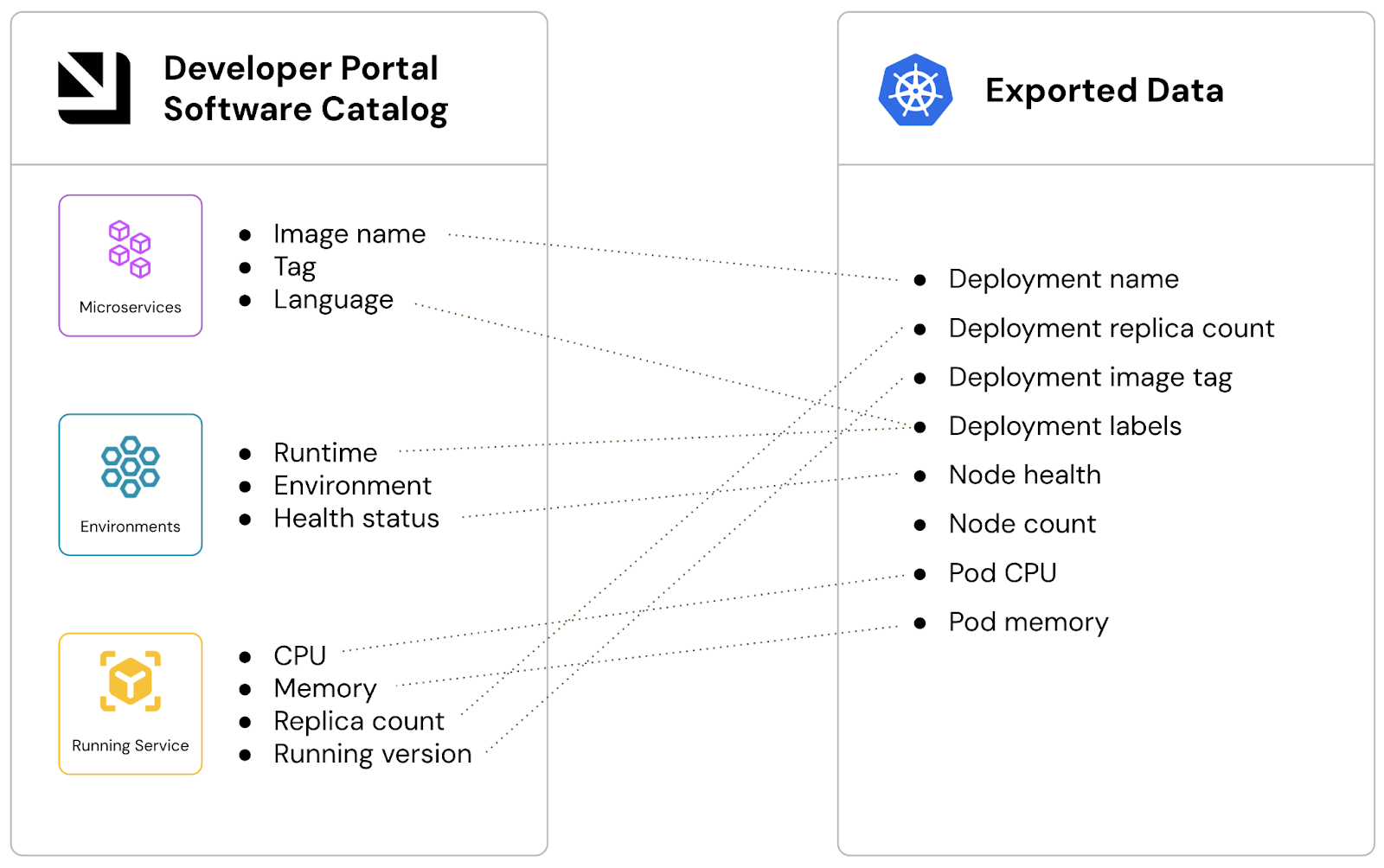

Now that we understand the capabilities of K8s controllers, let’s explore how Port’s IDP connects them to its software catalog through a single API.

Platform engineers can centralize all their developer tools, including K8s, within Port’s unified software catalog. This API catalog connects with K8s through CRDs and controllers, letting engineers:

- Access a single, reliable source of truth for K8s data. It breaks down silos, reduces cognitive load, and eliminates context switching.

- Incorporate K8s controllers to automate updates and oversee software, reducing manual efforts and accelerating deployment cycles.

- Boost visibility of clusters and cement consistency across environments.

- Trigger rollbacks when version issues emerge.
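Port's actual Kubernetes integration is configured through its own mapping settings, which this article doesn't cover. As a hypothetical illustration of the underlying idea, turning cluster objects into catalog entries, here is a Python sketch that flattens a Widget into a generic entry. The entry's field names (identifier, title, kind, properties) and the cluster name prod-eu are assumptions for this sketch, not Port's documented format.

```python
def to_catalog_entry(obj: dict, cluster: str) -> dict:
    """Flatten a Kubernetes object into a generic catalog entry (hypothetical schema)."""
    meta = obj.get("metadata", {})
    name = meta.get("name", "")
    namespace = meta.get("namespace", "")
    return {
        # cluster/namespace/name identifies an object uniquely across clusters
        "identifier": f"{cluster}/{namespace}/{name}",
        "title": name,
        "kind": obj.get("kind", ""),
        "properties": {**obj.get("spec", {}), "labels": meta.get("labels", {})},
    }


widget = {
    "kind": "Widget",
    "metadata": {"name": "orders-db", "namespace": "team-a", "labels": {"team": "payments"}},
    "spec": {"dbName": "orders", "env": "prod"},
}
print(to_catalog_entry(widget, cluster="prod-eu")["identifier"])  # prod-eu/team-a/orders-db
```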

Teams can test, view, and measure the performance of their K8s objects, such as custom resources, apps, and services, directly within the portal. They evaluate metrics like service health and response time to track performance. Teams employ Port’s RBAC to restrict access to K8s resources, which strengthens internal security.


Achieve more with the Kubernetes API server and Port

The K8s platform is much more than a container orchestrator. It provides an API server that developers can extend with custom data models and controllers.

Port’s IDP solution amplifies the power of the K8s API server. For instance, Xceptor, a data automation provider, accelerated its K8s implementation and aligned teams by adopting Port’s developer portal early. Custom abstraction layers mitigated complexity, elevating engineer productivity and focus. Explore Xceptor’s experience using Port to speed up their K8s adoption here.
